Difference between revisions of "Dense matrix-vector multiplication"

| [quality revision] | [quality revision] |

| Line 1: | Line 1: | ||

| − | Primary authors of this description: [[:ru:Участник:Frolov|A.V.Frolov]], [[:ru:Участник:VadimVV|Vad.V.Voevodin]] ([[#Locality of data and computations|Section 2.2]]), [[:ru:Участник:Teplov|A.M.Teplov]] ( | + | Primary authors of this description: [[:ru:Участник:Frolov|A.V.Frolov]], [[:ru:Участник:VadimVV|Vad.V.Voevodin]] ([[#Locality of data and computations|Section 2.2]]), [[:ru:Участник:Teplov|A.M.Teplov]] ([[#Scalability of the algorithm and its implementation|Section 2.4]]) |

== Description of the properties and structure of the algorithm == | == Description of the properties and structure of the algorithm == | ||

Revision as of 10:54, 20 July 2015

Primary authors of this description: A.V.Frolov, Vad.V.Voevodin (Section 2.2), A.M.Teplov (Section 2.4)

Contents

- 1 Description of the properties and structure of the algorithm

- 1.1 General description

- 1.2 Mathematical description

- 1.3 Computational kernel of the algorithm

- 1.4 Macrostructure of the algorithm

- 1.5 Implementation scheme of the serial algorithm

- 1.6 Serial complexity of the algorithm

- 1.7 Information graph

- 1.8 Parallelism resource of the algorithm

- 1.9 Description of the input and output data

- 1.10 Properties of the algorithm

1 Description of the properties and structure of the algorithm

1.1 General description

Matrix-vector multiplication is one of the basic procedures in algorithmic linear algebra, which is widely used in a number of different methods. Here, we consider the product [math]y = Ax[/math] of a dense real matrix and a vector (serial real version), that is, the version in which neither a special form of the matrix nor the associative properties of the addition operation are used.

1.2 Mathematical description

Input data: dense matrix [math]A[/math] of size m-by-n (with entries [math]a_{ij}[/math]), its vector cofactor [math]x[/math] of dimension n (with components [math]x_{i}[/math]).

Output data: vector [math]y[/math] of dimension m (with components [math]y_{i}[/math]).

Formulas of the method:

- [math] \begin{align} y_{i} = \sum_{j = 1}^{n} a_{ij} x_{j}, \quad i \in [1, m]. \end{align} [/math]

There also exists a block version of the method; however, this description treats only the pointwise version.

1.3 Computational kernel of the algorithm

The computational kernel of the dense matrix-vector multiplication can be compiled of repeated dot products of the rows of [math]A[/math] and the vector [math]x[/math] (there are on the whole [math]m[/math] such products):

- [math] \sum_{j = 1}^{n} a_{ij} x_{j}[/math]

Depending on the problem requirements, these sums are calculated with or without using the accumulation mode.

1.4 Macrostructure of the algorithm

As already noted in the description of the computational kernel, the basic part of the dense matrix-vector multiplication is compiled of repeated dot products of the rows of [math]A[/math] and the vector [math]x[/math] (there are on the whole [math]m[/math] such products):

- [math] \sum_{j = 1}^{n} a_{ij} x_{j}[/math]

These calculations are performed with or without using the accumulation mode.

1.5 Implementation scheme of the serial algorithm

For all [math]i[/math] from [math]1[/math] to [math]m[/math], do

- [math]y_{i} = \sum_{j = 1}^{n} a_{ij} x_{j}[/math]

We emphasize that sums of the form [math]\sum_{j = 1}^{n} a_{ij} x_{j}[/math] are calculated in the accumulation mode by adding the products [math]a_{ij} x_{j}[/math] to the current (temporary) value of the component [math]y_{i}[/math]. The index [math]j[/math] increases from [math]1[/math] to [math]n[/math]. All the sums are initialized to zero. The general scheme is virtually the same if summations are performed in the decreasing order of [math]j[/math]; therefore, this case is not considered. Other orders of summation change the parallel properties of the algorithm and are considered in separate descriptions.

1.6 Serial complexity of the algorithm

The multiplication of a square matrix of order [math]n[/math] (that is, [math]m=n[/math]) by a vector of the same dimension in the (fastest) serial version requires

- [math]n^2[/math] multiplications and the same number of additions.

The multiplication of an [math]m[/math]-by-[math]n[/math] matrix by a vector of dimension [math]n[/math] in the (fastest) serial version requires

- [math]mn[/math] multiplications and the same number of additions.

The use of accumulation requires that multiplications and additions be done in the double precision mode (or such procedures as the Fortran function DPROD be used). This increases the computation time for performing the matrix-vector multiplication.

In terms of serial complexity, the matrix-vector multiplication is qualified as a quadratic complexity algorithm (or a bilinear complexity algorithm if the matrix is rectangular).

1.7 Information graph

We describe the algorithm graph both analytically and graphically.

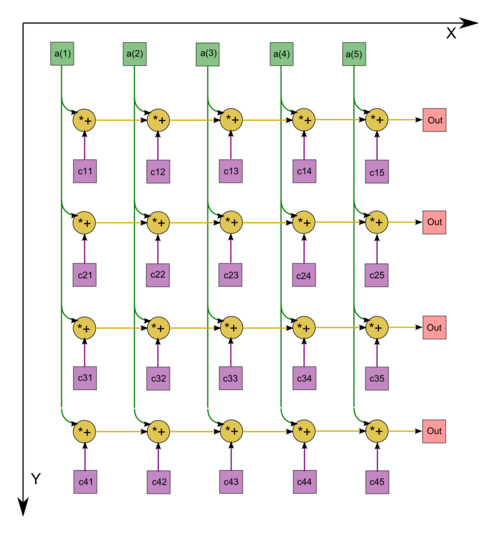

The algorithm graph of multiplying a dense matrix by a vector consists of a single group of vertices placed at integer nodes of a two-dimensional domain. The corresponding operation is [math]a+bc[/math].

The natural coordinates of this domain are as follows:

- [math]i[/math] varies from [math]1[/math] to [math]m[/math], taking all the integer values in this range;

- [math]j[/math] varies from [math]1[/math] to [math]n[/math], taking all the integer values in this range.

The arguments of the operation are as follows:

- [math]a[/math] is:

- the constant [math]0[/math] if [math]j = 1[/math];

- the result of performing the operation corresponding to the vertex with coordinates [math]i, j -1[/math] if [math]k \gt 1[/math];

- [math]b[/math] is the element [math]a_{ij}[/math] of the input data;

- [math]c[/math] is the element [math]x_{j}[/math] of the input data.

The result of performing the operation is:

- an intermediate data item if [math]j \lt n[/math];

- an output data item [math]c_{ij}[/math] if [math]j = n[/math].

The graph described can be seen in the figure corresponding to the case [math]m = 4, n = 5[/math]. The vertices are depicted in blue. Only the supplies of the input data from the vector [math]x[/math] are shown. The supplies of the entries of [math]A[/math] to all the vertices are not shown.

1.8 Parallelism resource of the algorithm

The parallel version of the algorithm for multiplying a square matrix of order n by a vector requires that the following layers be successively performed:

- [math]n[/math] layers of multiplications and the same number of layers for addition (there are [math]n[/math] operations in each layer).

The multiplication of an [math]m[/math]-by-[math]n[/math] matrix by a vector of dimension [math]n[/math] in the (fastest) serial version requires

- [math]n[/math] layers of multiplications and the same number of layers for addition (there are [math]m[/math] operations in each layer).

The use of accumulation requires that multiplications and additions be done in the double precision mode. For the parallel version, this implies that virtually all the intermediate calculations in the algorithm must be performed in double precision if the accumulation option is used. Unlike the situation with the serial version, this results in a certain increase in the required memory size.

In terms of the parallel form height, the dense matrix-vector multiplication is qualified as a linear complexity algorithm. In terms of the parallel form width, its complexity is also linear.

1.9 Description of the input and output data

Input data: matrix [math]A[/math] (with entries [math]a_{ij}[/math]), vector [math]x[/math] (with components [math]x_{i}[/math]).

Size of the input data : [math]mn+n[/math] .

Output data : vector [math]y[/math] (with components [math]y_{i}[/math]).

Size of the output data: [math]m[/math].

1.10 Properties of the algorithm

It is clear that, in the case of unlimited resources, the ratio of the serial to parallel complexity is linear (since this is the ratio of the quadratic or bilinear complexity to linear one).

The computational power of the dense matrix-vector multiplication, understood as the ratio of the number of operations to the total size of the input and output data, is only a constant.

The algorithm of dense matrix-vector multiplication is completely determined. We do not consider any other order of performing associative operations in this version.