Difference between revisions of "Linpack benchmark"

| [unchecked revision] | [checked revision] |

| (45 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

{{level-a}} | {{level-a}} | ||

| − | Primary authors of this description: [[:ru:Участник:Frolov|A.V.Frolov]] | + | Primary authors of this description: [[:ru:Участник:Frolov|A.V.Frolov]]. |

== Properties and structure of the algorithm == | == Properties and structure of the algorithm == | ||

| Line 7: | Line 7: | ||

=== General description of the algorithm === | === General description of the algorithm === | ||

| − | '''Linpack benchmark''' | + | '''Linpack benchmark''' is a test developed as a supplement to the Linpack library for testing the performance of computer systems. Actually, this test generates a system of linear algebraic equations (SLAE) <math>A \vec{x} = \vec{b}</math> with a random dense square matrix <math>A</math> and a vector <math>\vec{b}</math> chosen so that the solution of the system is the vector <math>\vec{x}</math> all of whose components are ones; then this SLAE is solved. In view of the general (with certain provisos) nature of the matrix, the solution of the system is divided into several stages. First, the <math>PLU</math>-decomposition of <math>A</math> is calculated by using Gaussian elimination with column pivoting (<math>P</math> is a permutation matrix, <math>L</math> is a lower triangular matrix with unit diagonal, and <math>U</math> is an upper triangular matrix). At the second stage, the calculated decomposition is used to solve the SLAE <math>P L U \vec{x} = \vec{b}</math>. At the third stage, the residual <math>\vec{r} = A \vec{x} - \vec{b}</math> and the related characteristics are calculated. Then the data concerning the resulting accuracy and performance are produced. The performance is only estimated for the main part of the algorithm; that is, the calculations spent on the residual and norms are ignored. |

| − | + | The Linpack benchmark, which was developed for testing computers of traditional architecture, should not be confused with the High Performance Linpack benchmark. The latter was devised for testing parallel computer systems and is based on a different (albeit similar) algorithm. | |

| − | === Mathematical description of the algorithm === | + | === Mathematical description of the algorithm === |

| + | Input data: square matrix <math>A</math> of order <math>n</math> (with entries <math>a_{ij}</math>; in the 1992 version, the random number generator is chosen so as to ensure the non-singularity of <math>A</math>) and the right-hand side vector <math>\vec{b}</math> (with components <math>b_i</math>). Strictly speaking, these are not input data: the entries of the matrix are pseudo-random numbers generated by the program itself and lie in the interval (-1/2, +1/2), and the right-hand sides are calculated as the row sums <math>b_i = \sum\limits_{j = 1}^n a_{ij}</math>, so that the exact solution is the vector of ones. However, in order to analyze the general scheme of the algorithm, it is convenient to regard <math>A</math> and <math>\vec{b}</math> as the input data. | ||

| − | + | Data to be calculated: lower triangular matrix <math>L</math> (with entries <math>l_{ij}</math>), upper triangular matrix <math>U</math> (with entries <math>u_{ij}</math>), permutation matrix <math>P</math> (which is calculated as a collection of pivot indices rather than a full square array), and the solution vector <math>x</math> (with components <math>x_i</math>). | |

| − | + | Here, we do not give detailed formulas of individual parts of the algorithm. These parts are independent algorithms, and their detailed descriptions should be presented separately. Consequently, we only discuss the general scheme of the algorithm. | |

| − | + | The first part — decomposing the matrix into the product of two triangular matrices and a permutation matrix: <math>A = P L U</math> — is performed by using Gaussian elimination with column pivoting. The permutation matrix is stored as a vector containing information on the performed permutations. The matrix <math>L</math> is lower triangular with unit diagonal, while <math>U</math> is an upper triangular matrix. | |

| − | + | The second part is solving two triangular SLAEs. First, the system <math>L \vec{y} = P^T \vec{b}</math> is solved by forward substitution; then back substitution is used to solve the system <math>U \vec{x} = \vec{y}</math>. This test stores both triangular matrices in the corresponding portions of the matrix <math>A</math>, overwriting it. For this reason, <math>A</math> is generated again (with the same starting parameters for the random number generator) before passing to the third part. | |

| − | + | The third part (for which the performance is not measured) calculates first the residual of the solution <math>\vec{r} = A \vec{x} - \vec{b}</math> and then its uniform norm, as well as the norm of the solution. On the completion of this stage, the algorithm outputs data related to the accuracy of the calculated solution and evaluates the performance of the system. | |

| − | |||

| − | |||

=== Computational kernel of the algorithm === | === Computational kernel of the algorithm === | ||

| − | + | The main part of the algorithm, which is the decomposition of a matrix via Gaussian elimination with column pivoting, is responsible for the bulk of operations. The second most important part is the calculation of the residual and its norm. It is implemented by the subroutine that calculates the sum of a vector and a matrix-vector product and by the function calculating the uniform norm. Less important parts are forward substitution in the Gauss method and back substitution in this method. | |

=== Macro structure of the algorithm === | === Macro structure of the algorithm === | ||

| − | + | As noted in the description of the computational kernel, the decomposition of a matrix via Gaussian elimination with column pivoting is responsible for the bulk of operations. This decomposition is the first macro operation. It is followed by the forward substitution and, immediately afterwards, by the back substitution. Once the matrix has been regenerated, the next macro operation is calculating the residual for the solution of the SLAE, followed by the calculation of the uniform norms of the residual and the solution. | |

=== Implementation scheme of the serial algorithm === | === Implementation scheme of the serial algorithm === | ||

| − | + | The mathematical description of the algorithm was given above. The standard test calculates the triangular decomposition in-place, and the solutions to the triangular systems in the forward and back substitution are written to the locations of the right-hand sides. For this reason, on the calculation of the solution, the coefficient matrix and the right-hand side are regenerated for the subsequent calculation of the residual. As soon as the residual is calculated, its norm and the norm of the solution can be calculated in any order, which does not affect the algorithm and its results. | |

=== Serial complexity of the algorithm === | === Serial complexity of the algorithm === | ||

| − | + | Since the main part of the algorithm is the decomposition of a matrix via Gaussian elimination with column pivoting, the algorithm, like this decomposition, has ''cubic'' complexity. The other parts have quadratic complexity; consequently, their contribution to the overall complexity of the algorithm is not comparable. | |

=== Information graph === | === Information graph === | ||

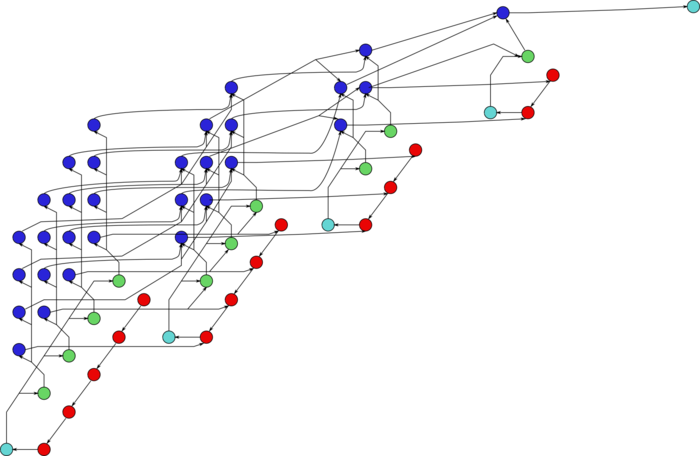

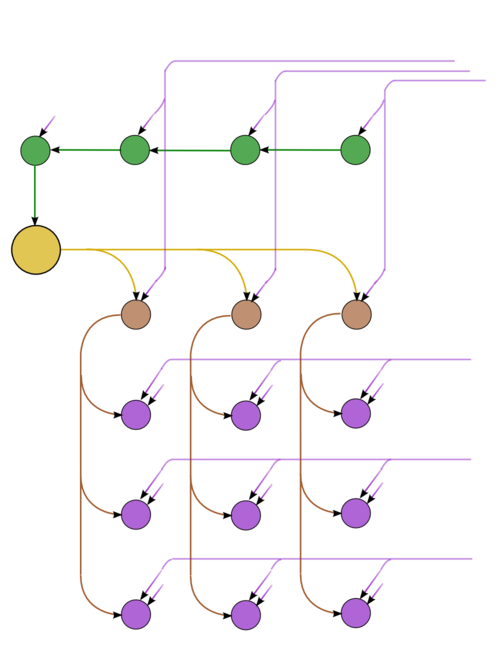

| − | + | The graph consists of the information graphs of its basic parts. The first part (Gaussian elimination with column pivoting) has the most complex graph. Figure 1 shows the simplified graph of this part as it looks after the pivots have been chosen. The operations of finding the maxima in the columns and saving the corresponding positions are given in green. The inversion of the current pivot, multiplications in the current column, and operations of the type <math>a b + c</math> in the other columns are depicted in yellow, brown, and purple, respectively. Figure 2 shows the graph of a layer (one step of the method). The notation is the same as in the full graph. Dotted lines denote data communication from the pivot row. | |

{| align="center" | {| align="center" | ||

|- valign="top" | |- valign="top" | ||

| − | | [[File:Gaussian_elimination.png|700px|thumb| | + | | [[File:Gaussian_elimination.png|700px|thumb|Figure 1. Simplified graph of Gaussian elimination with column pivoting]] |

| − | | [[File:Gaussian_elimination_step.png|500px| | + | | [[File:Gaussian_elimination_step.png|500px|Figure 2. Graph of one step of Gaussian elimination|thumb]] |

|} | |} | ||

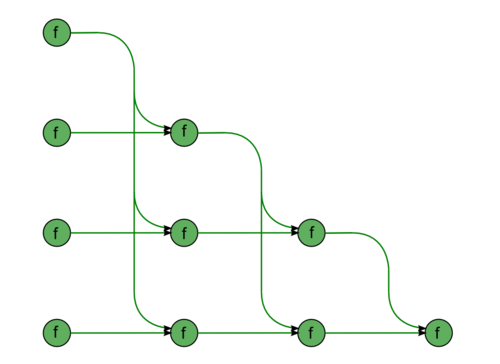

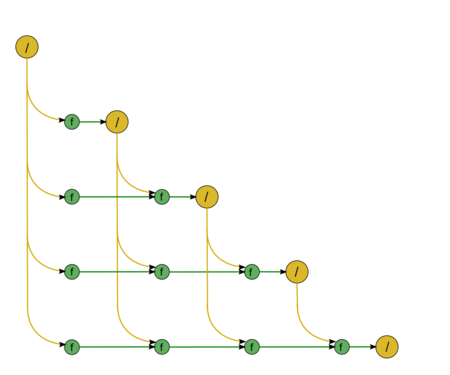

| − | + | The second part consists of almost identical fragments. The first fragment describes the forward substitution, namely, the solution of the SLAE with a lower triangular matrix <math>L \vec{y} = \vec{b}</math> (Fig.3). The diagonal entries of this matrix are ones; consequently, this fragment contains no divisions. All the green nodes correspond to the operations of the type <math>a b + c</math>. The second fragment describes the back substitution, namely, the solution of the SLAE with an upper triangular matrix <math>U \vec{x} = \vec{y}</math> (Fig.4). This matrix has no specific features; hence, the associated graph contains division operations, which are shown in yellow. | |

{| align="center" | {| align="center" | ||

|- valign="top" | |- valign="top" | ||

| − | | [[File:DirectL.png|500px|thumb| | + | | [[File:DirectL.png|500px|thumb|Figure 3. Graph of solving the SLAE with a lower triangular matrix]] |

| − | | [[File:DirectU.png|450px|thumb| | + | | [[File:DirectU.png|450px|thumb|Figure 4. Graph of solving the SLAE with an upper triangular matrix]] |

|} | |} | ||

=== Parallelization resource of the algorithm === | === Parallelization resource of the algorithm === | ||

| − | + | The analysis of the parallelization resource of the algorithm shows that Gaussian elimination with column pivoting has the highest parallel complexity, namely ''quadratic'' (precisely because of the pivoting). The other parts of the algorithm have at most linear complexity. Therefore, to optimize calculations, the virtual researcher must optimize Gaussian elimination with column pivoting. Besides, the first fragment of the second part (that is, the solution of the first triangular SLAE) can be attached to the first part and executed almost entirely in the background of the latter, because both recalculate the data in the same direction. However, the rest of the second part, despite its similarity to the first fragment, can only be executed on completion of the latter, because these two fragments recalculate the data in opposite directions. | |

=== Input and output data of the algorithm === | === Input and output data of the algorithm === | ||

| − | + | This test always uses the same data, which are calculated in the test itself. Consequently, in the strict sense, it has no input data. | |

| − | + | The output data, produced by the test, are the norms of the residual, solution, and matrix, the ratios of these norms, and the computational power of the target computer system. | |

=== Properties of the algorithm === | === Properties of the algorithm === | ||

| Line 75: | Line 74: | ||

=== Implementation peculiarities of the serial algorithm === | === Implementation peculiarities of the serial algorithm === | ||

| − | + | Strictly speaking, the investigator implementing the test for a computer system is at the same time testing LINPACK or any other package from which the procedures for triangular decomposition and for solving a SLAE with the help of this decomposition are called. The web versions of the test can also be used for testing other implementations of the Gauss method. As noted above, the triangular decomposition of the original matrix is calculated in-place, and, in the course of forward and back substitution, the solutions are written to the locations of the right-hand sides. This makes it necessary to regenerate both the coefficient matrix and the right-hand side for the subsequent calculation of the residual. This organization of the algorithm may well be changed in order to optimize the test. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

=== Possible methods and considerations for parallel implementation of the algorithm === | === Possible methods and considerations for parallel implementation of the algorithm === | ||

| − | === | + | === Run results === |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

=== Conclusions for different classes of computer architecture === | === Conclusions for different classes of computer architecture === | ||

| − | |||

== References == | == References == | ||

| + | |||

<references /> | <references /> | ||

| − | [[Category: | + | [[Category:Articles in progress]] |

[[Ru:Linpack benchmark]] | [[Ru:Linpack benchmark]] | ||

Latest revision as of 16:15, 12 July 2022

Primary authors of this description: A.V.Frolov.

Contents

- 1 Properties and structure of the algorithm

- 1.1 General description of the algorithm

- 1.2 Mathematical description of the algorithm

- 1.3 Computational kernel of the algorithm

- 1.4 Macro structure of the algorithm

- 1.5 Implementation scheme of the serial algorithm

- 1.6 Serial complexity of the algorithm

- 1.7 Information graph

- 1.8 Parallelization resource of the algorithm

- 1.9 Input and output data of the algorithm

- 1.10 Properties of the algorithm

- 2 Software implementation of the algorithm

- 3 References

1 Properties and structure of the algorithm

1.1 General description of the algorithm

Linpack benchmark is a test developed as a supplement to the Linpack library for testing the performance of computer systems. Actually, this test generates a system of linear algebraic equations (SLAE) [math]A \vec{x} = \vec{b}[/math] with a random dense square matrix [math]A[/math] and a vector [math]\vec{b}[/math] chosen so that the solution of the system is the vector [math]\vec{x}[/math] all of whose components are ones; then this SLAE is solved. In view of the general (with certain provisos) nature of the matrix, the solution of the system is divided into several stages. First, the [math]PLU[/math]-decomposition of [math]A[/math] is calculated by using Gaussian elimination with column pivoting ([math]P[/math] is a permutation matrix, [math]L[/math] is a lower triangular matrix with unit diagonal, and [math]U[/math] is an upper triangular matrix). At the second stage, the calculated decomposition is used to solve the SLAE [math]P L U \vec{x} = \vec{b}[/math]. At the third stage, the residual [math]\vec{r} = A \vec{x} - \vec{b}[/math] and the related characteristics are calculated. Then the data concerning the resulting accuracy and performance are produced. The performance is only estimated for the main part of the algorithm; that is, the calculations spent on the residual and norms are ignored.

The Linpack benchmark, which was developed for testing computers of traditional architecture, should not be confused with the High Performance Linpack benchmark. The latter was devised for testing parallel computer systems and is based on a different (albeit similar) algorithm.

1.2 Mathematical description of the algorithm

Input data: square matrix [math]A[/math] of order [math]n[/math] (with entries [math]a_{ij}[/math]; in the 1992 version, the random number generator is chosen so as to ensure the non-singularity of [math]A[/math]) and the right-hand side vector [math]\vec{b}[/math] (with components [math]b_i[/math]). Strictly speaking, these are not input data: the entries of the matrix are pseudo-random numbers generated by the program itself and lie in the interval (-1/2, +1/2), and the right-hand sides are calculated as the row sums [math]b_i = \sum\limits_{j = 1}^n a_{ij}[/math], so that the exact solution is the vector of ones. However, in order to analyze the general scheme of the algorithm, it is convenient to regard [math]A[/math] and [math]\vec{b}[/math] as the input data.
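The data generation described above can be sketched in a few lines. This is only an illustration: the function name `generate_system`, the seed value, and the use of Python's own generator are assumptions made here, and the actual test uses its own pseudo-random number generator.

```python
import random


def generate_system(n, seed=1325):
    """Return (a, b): a dense n x n matrix and the right-hand side b = A * ones.

    Python's generator stands in for the benchmark's own generator, and the
    seed is an arbitrary illustrative value.
    """
    rng = random.Random(seed)
    # random() lies in [0, 1), so every entry lies in [-1/2, 1/2).
    a = [[rng.random() - 0.5 for _ in range(n)] for _ in range(n)]
    # Each right-hand side is the sum of one row, so the exact solution is all ones.
    b = [sum(row) for row in a]
    return a, b
```

Calling `generate_system` twice with the same seed returns the same matrix, which mirrors the way the test regenerates the matrix before the residual is calculated.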

Data to be calculated: lower triangular matrix [math]L[/math] (with entries [math]l_{ij}[/math]), upper triangular matrix [math]U[/math] (with entries [math]u_{ij}[/math]), permutation matrix [math]P[/math] (which is calculated as a collection of pivot indices rather than a full square array), and the solution vector [math]x[/math] (with components [math]x_i[/math]).

Here, we do not give detailed formulas of individual parts of the algorithm. These parts are independent algorithms, and their detailed descriptions should be presented separately. Consequently, we only discuss the general scheme of the algorithm.

The first part — decomposing the matrix into the product of two triangular matrices and a permutation matrix: [math]A = P L U[/math] — is performed by using Gaussian elimination with column pivoting. The permutation matrix is stored as a vector containing information on the performed permutations. The matrix [math]L[/math] is lower triangular with unit diagonal, while [math]U[/math] is an upper triangular matrix.
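A minimal sketch of the first part, assuming the matrix is held as a list of rows: the factors overwrite the input, and the permutation is kept as a list of pivot row indices, as described above. The name `lu_factor` and the row-swapping layout are choices made for this illustration and are not taken from the Linpack sources.

```python
def lu_factor(a):
    """Factor the square matrix a in place and return the pivot indices.

    On return, the strict lower triangle of a holds the multipliers of L
    (its unit diagonal is not stored) and the upper triangle holds U.
    piv[k] is the row that was swapped with row k at step k.
    """
    n = len(a)
    piv = []
    for k in range(n):
        # Pivot search: largest magnitude in column k, on or below the diagonal.
        p = max(range(k, n), key=lambda i: abs(a[i][k]))
        if a[p][k] == 0.0:
            raise ZeroDivisionError("matrix is singular")
        piv.append(p)
        a[k], a[p] = a[p], a[k]  # row interchange
        inv = 1.0 / a[k][k]      # inversion of the current pivot
        for i in range(k + 1, n):
            a[i][k] *= inv       # multiplier stored in the current column
            m = a[i][k]
            for j in range(k + 1, n):
                a[i][j] -= m * a[k][j]  # update of the type a*b + c
    return piv
```

The three nested loops are what make this part dominate the operation count.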

The second part is solving two triangular SLAEs. First, the system [math]L \vec{y} = P^T \vec{b}[/math] is solved by forward substitution; then back substitution is used to solve the system [math]U \vec{x} = \vec{y}[/math]. This test stores both triangular matrices in the corresponding portions of the matrix [math]A[/math], overwriting it. For this reason, [math]A[/math] is generated again (with the same starting parameters for the random number generator) before passing to the third part.
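The two triangular solves can be sketched as follows. The sketch assumes a packed factor layout (multipliers of the unit lower triangular factor below the diagonal, the upper triangular factor on and above it) and a list `piv` of row interchanges; the function name `lu_solve` and this layout are illustrative assumptions. Unlike the test itself, the sketch works on a copy of the right-hand side instead of overwriting it.

```python
def lu_solve(lu, piv, b):
    """Solve the system from packed LU factors and a pivot list.

    lu[i][j] for j < i holds the multipliers of the unit lower triangular
    factor; lu[i][j] for j >= i holds the upper triangular factor.
    piv[k] is the row swapped with row k during elimination.
    """
    n = len(lu)
    x = list(b)  # work on a copy of the right-hand side
    # Apply the recorded row interchanges to the right-hand side.
    for k in range(n):
        x[k], x[piv[k]] = x[piv[k]], x[k]
    # Forward substitution: the diagonal of L is one, so no divisions occur.
    for i in range(n):
        for j in range(i):
            x[i] -= lu[i][j] * x[j]
    # Back substitution: one division by the diagonal entry of U per unknown.
    for i in reversed(range(n)):
        for j in range(i + 1, n):
            x[i] -= lu[i][j] * x[j]
        x[i] /= lu[i][i]
    return x
```

For the matrix with rows (2, 1) and (4, 3) and right-hand side (3, 7), the packed factors are rows (4, 3) and (0.5, -0.5) with pivots [1, 1], and the sketch returns the vector of ones.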

The third part (for which the performance is not measured) calculates first the residual of the solution [math]\vec{r} = A \vec{x} - \vec{b}[/math] and then its uniform norm, as well as the norm of the solution. On the completion of this stage, the algorithm outputs data related to the accuracy of the calculated solution and evaluates the performance of the system.
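The third part reduces to one matrix-vector product and two maxima. A minimal sketch, with the hypothetical name `residual_and_norms` and with the matrix held as a list of rows:

```python
def residual_and_norms(a, x, b):
    """Return (r, norm_r, norm_x) for r = A x - b in the uniform (max) norm.

    a must be the original (regenerated) matrix, not the overwritten factors.
    """
    r = [sum(aij * xj for aij, xj in zip(row, x)) - bi
         for row, bi in zip(a, b)]
    norm_r = max(abs(v) for v in r)  # uniform norm of the residual
    norm_x = max(abs(v) for v in x)  # uniform norm of the solution
    return r, norm_r, norm_x
```

This step costs on the order of the square of the matrix order, which is why it is not included in the measured performance.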

1.3 Computational kernel of the algorithm

The main part of the algorithm, which is the decomposition of a matrix via Gaussian elimination with column pivoting, is responsible for the bulk of operations. The second most important part is the calculation of the residual and its norm. It is implemented by the subroutine that calculates the sum of a vector and a matrix-vector product and by the function calculating the uniform norm. Less important parts are forward substitution in the Gauss method and back substitution in this method.

1.4 Macro structure of the algorithm

As noted in the description of the computational kernel, the decomposition of a matrix via Gaussian elimination with column pivoting is responsible for the bulk of operations. This decomposition is the first macro operation. It is followed by the forward substitution and, immediately afterwards, by the back substitution. Once the matrix has been regenerated, the next macro operation is calculating the residual for the solution of the SLAE, followed by the calculation of the uniform norms of the residual and the solution.

1.5 Implementation scheme of the serial algorithm

The mathematical description of the algorithm was given above. The standard test calculates the triangular decomposition in-place, and the solutions to the triangular systems in the forward and back substitution are written to the locations of the right-hand sides. For this reason, on the calculation of the solution, the coefficient matrix and the right-hand side are regenerated for the subsequent calculation of the residual. As soon as the residual is calculated, its norm and the norm of the solution can be calculated in any order, which does not affect the algorithm and its results.

1.6 Serial complexity of the algorithm

Since the main part of the algorithm is the decomposition of a matrix via Gaussian elimination with column pivoting, the algorithm, like this decomposition, has cubic complexity. The other parts have quadratic complexity; consequently, their contribution to the overall complexity of the algorithm is not comparable.
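To make the cubic and quadratic terms concrete, the sketch below uses the operation count conventionally quoted for Linpack results, two thirds of the cube of the matrix order for the decomposition plus twice its square for the two triangular solves. This count is not stated in the text above and is brought in here as an assumption; the function names are illustrative.

```python
def linpack_flops(n):
    """Nominal floating-point operation count for a system of order n.

    Assumed convention: 2/3 n^3 for the decomposition plus 2 n^2 for the
    forward and back substitution.
    """
    return 2.0 * n**3 / 3.0 + 2.0 * n**2


def mflops(n, seconds):
    """Convert a measured time for factorization plus solve into Mflop/s."""
    return linpack_flops(n) / seconds / 1.0e6
```

For a system of order 1000 the quadratic term is about 0.3% of the total, which illustrates why the triangular solves barely affect the reported performance.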

1.7 Information graph

The graph consists of the information graphs of its basic parts. The first part (Gaussian elimination with column pivoting) has the most complex graph. Figure 1 shows the simplified graph of this part as it looks after the pivots have been chosen. The operations of finding the maxima in the columns and saving the corresponding positions are given in green. The inversion of the current pivot, multiplications in the current column, and operations of the type [math]a b + c[/math] in the other columns are depicted in yellow, brown, and purple, respectively. Figure 2 shows the graph of a layer (one step of the method). The notation is the same as in the full graph. Dotted lines denote data communication from the pivot row.

The second part consists of almost identical fragments. The first fragment describes the forward substitution, namely, the solution of the SLAE with a lower triangular matrix [math]L \vec{y} = \vec{b}[/math] (Fig.3). The diagonal entries of this matrix are ones; consequently, this fragment contains no divisions. All the green nodes correspond to the operations of the type [math]a b + c[/math]. The second fragment describes the back substitution, namely, the solution of the SLAE with an upper triangular matrix [math]U \vec{x} = \vec{y}[/math] (Fig.4). This matrix has no specific features; hence, the associated graph contains division operations, which are shown in yellow.

1.8 Parallelization resource of the algorithm

The analysis of the parallelization resource of the algorithm shows that Gaussian elimination with column pivoting has the highest parallel complexity, namely quadratic (precisely because of the pivoting). The other parts of the algorithm have at most linear complexity. Therefore, to optimize calculations, the virtual researcher must optimize Gaussian elimination with column pivoting. Besides, the first fragment of the second part (that is, the solution of the first triangular SLAE) can be attached to the first part and executed almost entirely in the background of the latter, because both recalculate the data in the same direction. However, the rest of the second part, despite its similarity to the first fragment, can only be executed on completion of the latter, because these two fragments recalculate the data in opposite directions.

1.9 Input and output data of the algorithm

This test always uses the same data, which are calculated in the test itself. Consequently, in the strict sense, it has no input data.

The output data, produced by the test, are the norms of the residual, solution, and matrix, the ratios of these norms, and the computational power of the target computer system.

1.10 Properties of the algorithm

2 Software implementation of the algorithm

2.1 Implementation peculiarities of the serial algorithm

Strictly speaking, the investigator implementing the test for a computer system is at the same time testing LINPACK or any other package from which the procedures for triangular decomposition and for solving a SLAE with the help of this decomposition are called. The web versions of the test can also be used for testing other implementations of the Gauss method. As noted above, the triangular decomposition of the original matrix is calculated in-place, and, in the course of forward and back substitution, the solutions are written to the locations of the right-hand sides. This makes it necessary to regenerate both the coefficient matrix and the right-hand side for the subsequent calculation of the residual. This organization of the algorithm may well be changed in order to optimize the test.