Boruvka's algorithm

| Borůvka's algorithm | |

| Sequential algorithm | |

| Serial complexity | [math]O(|E|ln(|V|))[/math] |

| Input data | [math]O(|V| + |E|)[/math] |

| Output data | [math]O(|V|)[/math] |

| Parallel algorithm | |

| Parallel form height | [math]O(\ln(|V|))[/math] |

| Parallel form width | [math]O(|E|)[/math] |

Contents

- 1 Properties and structure of the algorithm

- 1.1 General description of the algorithm

- 1.2 Mathematical description of the algorithm

- 1.3 Computational kernel of the algorithm

- 1.4 Macro structure of the algorithm

- 1.5 Implementation scheme of the serial algorithm

- 1.6 Serial complexity of the algorithm

- 1.7 Information graph

- 1.8 Parallelization resource of the algorithm

- 1.9 Input and output data of the algorithm

- 1.10 Properties of the algorithm

- 2 Software implementation of the algorithm

- 2.1 Implementation peculiarities of the serial algorithm

- 2.2 Locality of data and computations

- 2.3 Possible methods and considerations for parallel implementation of the algorithm

- 2.4 Scalability of the algorithm and its implementations

- 2.5 Dynamic characteristics and efficiency of the algorithm implementation

- 2.6 Conclusions for different classes of computer architecture

- 2.7 Existing implementations of the algorithm

- 3 References

1 Properties and structure of the algorithm

1.1 General description of the algorithm

The Borůvka algorithm[1][2] was designed for constructing the minimum spanning tree in a weighted undirected graph. It is well parallelizable and is a foundation of the distributed GHS algorithm.

1.2 Mathematical description of the algorithm

Let [math]G = (V, E)[/math] be a connected undirected graph with the edge weights [math]f(e)[/math]. It is assumed that all the edges have distinct weights (otherwise, the edges can be sorted first by weight and then by index).

Borůvka's algorithm is based on the following two facts:

- Minimum edge of a fragment. Let [math]F[/math] be a fragment of the minimum spanning tree, and let [math]e_F[/math] be an edge with the least weight outgoing from [math]F[/math] (that is, exactly one of its ends is a vertex in [math]F[/math]). If such an edge [math]e_F[/math] is unique, then it belongs to the minimum spanning tree.

- Merging fragments. Let [math]F[/math] be a fragment of the minimum spanning tree of [math]G[/math], while the graph [math]G'[/math] is obtained from [math]G[/math] by merging the vertices that belong to [math]F[/math]. Then the union of [math]F[/math] and the minimum spanning tree of [math]G'[/math] yields the minimum spanning tree of the original graph [math]G[/math].

At the start of the algorithm, each vertex of [math]G[/math] is a separate fragment. At the current step, the outgoing edge with the least weight (if such an edge exists) is chosen for each fragment. The chosen edges are added to the minimum spanning tree, and the corresponding fragments are merged.

1.3 Computational kernel of the algorithm

The basic operations of the algorithm are:

- Search for the outgoing edge with the least weight in each fragment.

- Merging fragments.

1.4 Macro structure of the algorithm

For a given connected undirected graph, the problem is to find the tree that connects all the vertices and has the minimum total weight.

The classical example (taken from Borůvka's paper) is to design the cheapest electrical network if the price for each piece of electric line is known.

Let [math]G=(V,E)[/math] be a connected graph with the vertices [math]V = ( v_{1}, v_{2}, ..., v_{n} )[/math] and the edges [math]E = ( e_{1}, e_{2}, ..., e_{m} )[/math]. Each edge [math]e \in E[/math] is assigned the weight [math]w(e)[/math].

It is required to construct the tree [math]T^* \subseteq E[/math] that connects all the vertices and has the least possible weight among all such trees:

[math] w(T^* )= \min_T( w(T)) [/math].

The weight of a set of edges is the sum of their weights:

[math]w(T)=\sum_{e \in T} w(e)[/math]

If [math]G[/math] is not connected, then there is no tree connecting all of its vertices.

In this case, it is required to find the minimum spanning tree for each connected component of [math]G[/math]. The collection of such trees is called the minimum spanning forest (abbreviated as MSF).

1.4.1 Auxiliary algorithm: system of disjoint sets (Union-Find)

Every algorithm for solving this problem must be able to decide which of the already constructed fragments contains a given vertex of the graph. To this end, the data structure called a «system of disjoint sets» (Union-Find) is used. This structure supports the following two operations:

1. [math]FIND(v) = w[/math] – for a given vertex v, returns the vertex w, which is the root of the fragment that contains v. It is guaranteed that u and v belong to the same fragment if and only if [math]FIND(u) = FIND(v)[/math].

2. [math]MERGE(u, v)[/math] – combines two fragments that contain the vertices [math]u[/math] and [math]v.[/math] (If these vertices already belong to the same fragment, then nothing happens.) It is convenient that, in a practical implementation, this operation would return the value "true" if the fragments were combined and the value "false," otherwise.

1.4.2 Serial version

The classical serial Union-Find algorithm is described in a paper by Tarjan. Each vertex v is assigned a pointer to its parent vertex [math]parent(v)[/math].

1. At first, [math]parent(v) := v[/math] for all the vertices.

2. [math]FIND(v)[/math] is executed as follows: set [math]u := v[/math]; then follow the pointers [math]u := parent(u)[/math] until [math]u = parent(u)[/math] holds. This [math]u[/math] is the result of the operation. An optional addition is path compression: for all the visited vertices, set [math]parent(u_i) := u[/math], or shorten the path along the way: [math]parent(u) := parent(parent(u))[/math].

3. [math]MERGE(u, v)[/math] is executed as follows: first, find the root vertices [math]u := FIND(u), v := FIND(v)[/math]. If [math]u = v[/math], then the original vertices belong to the same fragment and no merging occurs. Otherwise, set either [math]parent(u) := v[/math] or [math]parent(v) := u[/math]. In addition, one can keep track of the number of vertices in each fragment in order to attach the smaller fragment to the larger one rather than the other way round. (Complexity estimates are derived exactly for such an implementation; however, in practice, the algorithm performs well even without counting the number of vertices.)
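The three steps above can be sketched in Python as follows. This is a minimal illustration rather than a reference implementation: the class name UnionFind, the method names, and the choice of path halving together with union by size are assumptions made for the example.

```python
class UnionFind:
    """Disjoint-set forest with path halving and union by size (a sketch)."""

    def __init__(self, n):
        self.parent = list(range(n))  # parent(v) := v for every vertex
        self.size = [1] * n           # number of vertices in each fragment

    def find(self, v):
        # Follow parent pointers to the root, halving the path on the way.
        while self.parent[v] != v:
            self.parent[v] = self.parent[self.parent[v]]
            v = self.parent[v]
        return v

    def merge(self, u, v):
        u, v = self.find(u), self.find(v)
        if u == v:
            return False              # already in the same fragment
        if self.size[u] < self.size[v]:
            u, v = v, u               # make u the root of the larger fragment
        self.parent[v] = u            # attach the smaller fragment to the larger
        self.size[u] += self.size[v]
        return True
```

Here merge returns "true" only when two distinct fragments were actually combined, which is the convention suggested in the description of [math]MERGE(u, v)[/math].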

1.5 Implementation scheme of the serial algorithm

In Borůvka's algorithm, the fragments of the minimum spanning tree are built up gradually by joining the minimum edges outgoing from each fragment.

1. At the start of the algorithm, each vertex is a separate fragment.

2. At each step:

- For each fragment, the outgoing edge with the minimum weight is determined.

- Minimum edges are added to the minimum spanning tree, and the corresponding fragments are combined.

3. The algorithm terminates when only one fragment is left or no fragment has outgoing edges.
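The scheme above can be sketched in Python as follows. The function name boruvka_msf, the representation of edges as (weight, u, v) tuples, and the tie-breaking by edge index are assumptions made for this illustration; the sketch uses a plain parent array with path halving instead of a full Union-Find structure.

```python
def boruvka_msf(num_vertices, edges):
    """Return the edges of a minimum spanning forest (illustrative sketch).

    edges is a list of (weight, u, v) tuples; ties are broken by edge index.
    """
    parent = list(range(num_vertices))  # each vertex is its own fragment

    def find(v):
        while parent[v] != v:
            parent[v] = parent[parent[v]]  # path halving
            v = parent[v]
        return v

    forest = []
    while True:
        # Step 1: cheapest outgoing edge for every fragment, keyed by root.
        best = {}
        for index, (weight, u, v) in enumerate(edges):
            ru, rv = find(u), find(v)
            if ru == rv:
                continue  # both ends are in the same fragment
            key = (weight, index)
            for root in (ru, rv):
                if root not in best or key < best[root]:
                    best[root] = key
        if not best:
            break  # no fragment has an outgoing edge
        # Step 2: add the chosen edges and merge the fragments.
        for weight, index in set(best.values()):
            _, u, v = edges[index]
            ru, rv = find(u), find(v)
            if ru != rv:
                parent[ru] = rv
                forest.append(edges[index])
    return forest
```

For the edge list [(1, 0, 1), (2, 1, 2), (3, 2, 3), (4, 0, 3), (5, 0, 2)] on five vertices, the function returns three edges of total weight 6; the isolated vertex 4 stays a fragment of its own.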

The search for minimum outgoing edges can be performed independently for each fragment. Therefore, this stage of computations can be efficiently parallelized (including the use of the massive parallelism of graphics accelerators).

The merging of fragments can also be implemented in parallel by using a parallel version of the Union-Find algorithm (see section 2.3).

An accurate count of the number of active fragments makes it possible to terminate Borůvka's algorithm one step earlier than in the above description:

1. At the start of each iteration, the counter of active fragments is set to zero.

2. At the stage of search for minimum edges, the counter is increased by one for each fragment that has outgoing edges.

3. At the stage of combining fragments, the counter is decreased by one each time when the operation [math]MERGE(u, v)[/math] returns the value "true".

If, at the end of an iteration, the value of the counter is 0 or 1, then the algorithm stops. Parallel processing is possible at the stage of sorting edges by weight; however, the basic part of the algorithm is serial.
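The counter-based termination test can be sketched as follows. The function name boruvka_with_counter and the (weight, u, v) edge tuples are assumptions made for the example, and the sketch assumes that the counter is recomputed in every iteration. The function also returns the number of iterations so that the early stop can be observed.

```python
def boruvka_with_counter(num_vertices, edges):
    """Borůvka's algorithm with an active-fragment counter (a sketch).

    Returns (forest, iterations); edges holds (weight, u, v) tuples.
    """
    parent = list(range(num_vertices))

    def find(v):
        while parent[v] != v:
            parent[v] = parent[parent[v]]  # path halving
            v = parent[v]
        return v

    def merge(u, v):
        ru, rv = find(u), find(v)
        if ru == rv:
            return False
        parent[ru] = rv
        return True

    forest, iterations = [], 0
    while True:
        iterations += 1
        best = {}
        for index, (weight, u, v) in enumerate(edges):
            ru, rv = find(u), find(v)
            if ru != rv:
                for root in (ru, rv):
                    if root not in best or (weight, index) < best[root]:
                        best[root] = (weight, index)
        active = len(best)       # fragments that have outgoing edges
        for weight, index in set(best.values()):
            _, u, v = edges[index]
            if merge(u, v):
                active -= 1      # a successful merge removes one fragment
                forest.append(edges[index])
        if active <= 1:
            return forest, iterations
```

On a connected graph whose fragments all merge in the first pass, the counter drops to 1 and the function stops after a single iteration, without an extra pass whose only purpose would be to discover that no outgoing edges remain.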

1.6 Serial complexity of the algorithm

The serial complexity of Borůvka's algorithm for a graph with [math]|V|[/math] vertices and [math]|E|[/math] edges is [math]O(|E| \ln(|V|))[/math] operations.

1.7 Information graph

There are two levels of parallelism in the above description: the parallelism in the classical Borůvka's algorithm (lower level) and the parallelism in the processing of a graph that does not fit in the memory.

Lower level of parallelism: the search for minimum outgoing edges can be performed independently for each fragment, which makes it possible to parallelize this stage efficiently (both on GPU and CPU). The merging of fragments can also be executed in parallel with the use of the Union-Find algorithm.

Upper level of parallelism: the construction of separate minimum spanning trees for each edge list can be performed in parallel. For instance, the overall list of edges can be partitioned into two parts, one of which is processed on GPU while the other is processed in parallel on CPU.

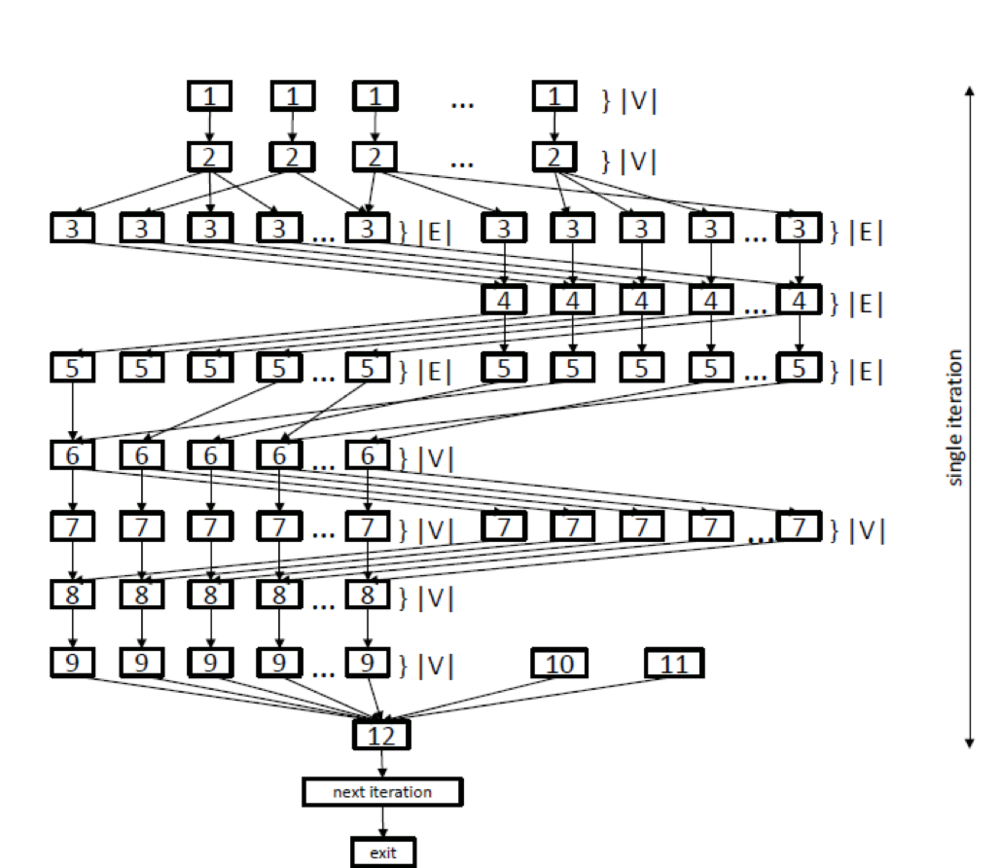

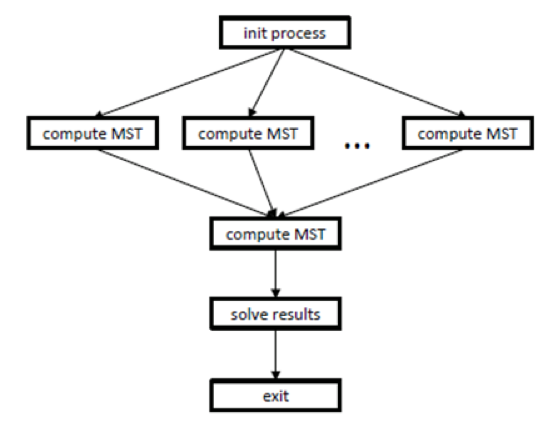

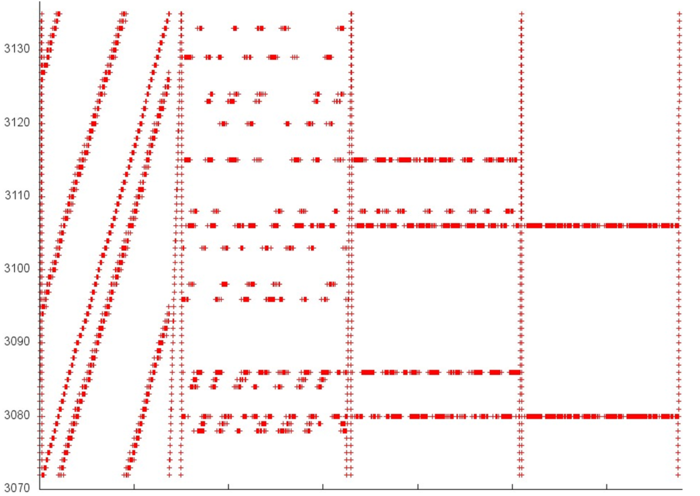

Consider the information graphs and their detailed descriptions. Figure 1 can be regarded as the information graph of the classical Borůvka's algorithm, while figure 2 shows the information graph of the processing algorithm.

In the graph shown in figure 1, the lower level of parallelism is represented by levels {3, 4, 5}, which correspond to parallel search operations for minimum outgoing edges, and by levels {6, 7, 8}, which correspond to parallel operations of merging trees. Various copying operations {1, 2, 8, 9} are also performed in parallel. After the body of the loop has been executed, test {12} verifies how many trees are left at the current step. If this number has not changed, the loop terminates; otherwise, the algorithm passes to the next iteration.

As already said, the upper level of parallelism, illustrated by figure 2, refers to the parallel computation of minimum spanning trees (operation "compute mst") for different parts of the original graph. Prior to this computation, the initialization process ("init process") is performed, and its data are used by the subsequent parallel operations "compute mst". After these parallel computations, the ultimate spanning tree is calculated, and the result obtained is saved to memory ("save results").
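The upper level of parallelism can be illustrated by the following Python sketch. The function names msf and msf_by_parts are hypothetical, and Kruskal's algorithm is used inside msf only as a compact stand-in for the "compute mst" operation. The thread pool shows the independence of the partial computations; in CPython it is not expected to give a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def msf(num_vertices, edges):
    """Minimum spanning forest of a list of (weight, u, v) tuples.

    Kruskal's algorithm is a stand-in here for the "compute mst" operation.
    """
    parent = list(range(num_vertices))

    def find(v):
        while parent[v] != v:
            parent[v] = parent[parent[v]]  # path halving
            v = parent[v]
        return v

    forest = []
    for weight, u, v in sorted(edges):
        ru, rv = find(u), find(v)
        if ru != rv:
            parent[ru] = rv
            forest.append((weight, u, v))
    return forest


def msf_by_parts(num_vertices, edges, num_parts=2):
    # Partition the edge list into num_parts slices.
    parts = [edges[i::num_parts] for i in range(num_parts)]
    # "compute mst" for every part; the calls do not depend on each other.
    with ThreadPoolExecutor(max_workers=num_parts) as pool:
        partial = list(pool.map(lambda part: msf(num_vertices, part), parts))
    # Final serial step: spanning forest of the union of the partial forests.
    merged = [edge for forest in partial for edge in forest]
    return msf(num_vertices, merged)
```

The final call is valid because an edge rejected within its own part is the heaviest edge of some cycle and therefore cannot belong to the minimum spanning forest of the whole graph.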

1.8 Parallelization resource of the algorithm

Thus, Borůvka's algorithm has two levels of parallelism.

At the upper level, the minimum spanning trees may be searched for separate parts of the list of graph edges (parallel operations "compute_MST" in figure 2). However, this must be followed by a final union of the edges obtained and the computation of the minimum spanning tree for the resulting graph, which is performed serially.

Besides, the computation of each minimum spanning tree (parallel operations "compute_MST" in figure 2) has an intrinsic resource of parallelism discussed below. The operations of initialization and copying data (see [1], [2], and [9] in figure 1) can be performed in parallel in [math]O(|V|)[/math] steps. The operations of searching for minimum outgoing edges (see [3], [4], and [5]) can also be executed in parallel, moreover, independently for each arc and its reverse, which yields [math]2*O(|E|)[/math] parallel operations. In addition, the tree-merging operations [6], [7], and [8] can also be performed in parallel in [math]O(|V|)[/math] operations.

As a result, the width of the parallel form of the classical Borůvka's algorithm is [math]O(|E|)[/math]. The height of the parallel form depends on the number of steps in the algorithm and is bounded above by [math]O(ln(|V|))[/math].

1.9 Input and output data of the algorithm

Input data: weighted graph [math](V, E, W)[/math] ([math]|V|[/math] vertices [math]v_i[/math] and [math]|E|[/math] edges [math]e_j = (v^{(1)}_{j}, v^{(2)}_{j})[/math] with weights [math]f_j[/math]).

Size of the input data: [math]O(|V| + |E|)[/math].

Output data: the list of edges of the minimum spanning tree (for a disconnected graph, the list of minimum spanning trees for all connected components).

Size of the output data: [math]O(|V|)[/math].

1.10 Properties of the algorithm

- The algorithm terminates in a finite number of steps because, at each step, the number of fragments reduces by at least one.

- Moreover, the number of fragments at least halves at each step; consequently, the total number of steps is at most [math]\log_2 n[/math]. This implies an estimate for the complexity of the algorithm.
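The step bound and the resulting complexity estimate can be spelled out as follows. The symbol [math]k_i[/math] for the number of fragments after step [math]i[/math] is introduced here only for illustration, a connected graph with [math]n = |V| \ge 2[/math] vertices is assumed, and the cost of a Union-Find operation is treated as constant, which is a simplifying assumption. Every fragment merges with at least one other fragment at each step, so each new fragment contains at least two old ones:

[math]k_0 = n, \qquad k_{i+1} \le \frac{k_i}{2} \quad \Longrightarrow \quad k_i \le \frac{n}{2^i}[/math]

The algorithm stops as soon as [math]k_i = 1[/math], that is, after at most [math]\lceil \log_2 n \rceil[/math] steps. Each step examines every edge a bounded number of times, which gives

[math]\lceil \log_2 n \rceil \cdot O(|E|) = O(|E| \ln(|V|))[/math]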

2 Software implementation of the algorithm

2.1 Implementation peculiarities of the serial algorithm

2.2 Locality of data and computations

The memory accesses at the stage of searching for the minimum edge are as follows:

1. Reading information on edges (can be carried out in a serial mode).

2. Testing an edge for the belonging to a fragment (two reads from the array [math]parent(u)[/math] with a probable cache miss).

3. Reading and updating the minimum weight of a fragment edge. This information can be cached, which especially makes sense at the later steps. However, the updating must be done atomically, which requires a cache invalidation.

At the stage of merging fragments, it is required to atomically update the array [math]parent(u)[/math] for each edge added to a minimum spanning tree. For some implementations of the parallel Union-Find structure, the roots of fragments can be located near the beginning of this array, which makes it possible to cache this (most frequently read) area. However, the requirement of atomicity restricts the effect of such caching.

2.2.1 Locality of implementation

2.2.1.1 Structure of memory access and a qualitative estimation of locality

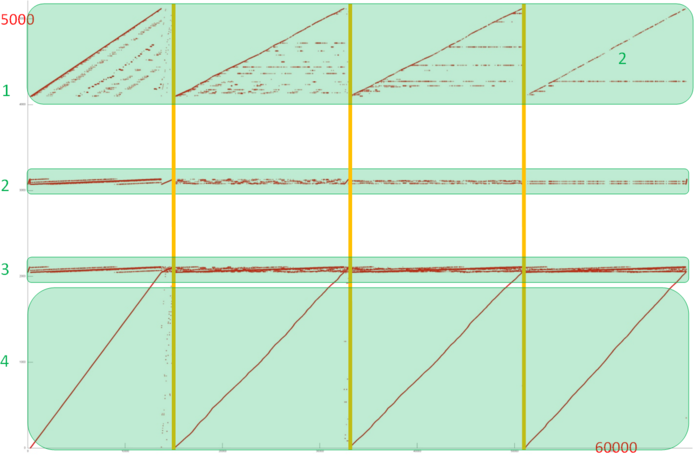

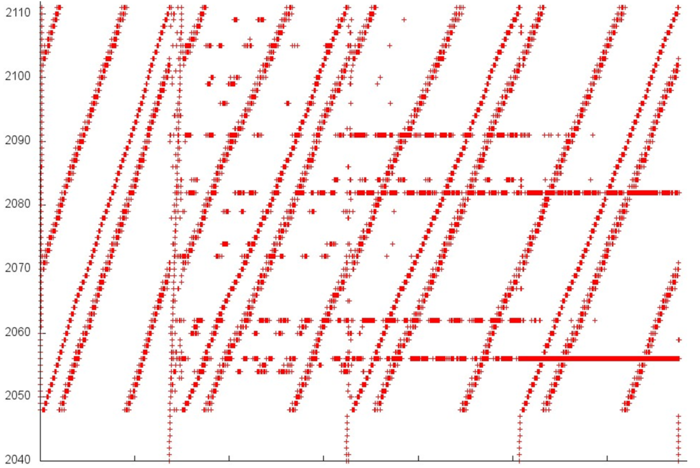

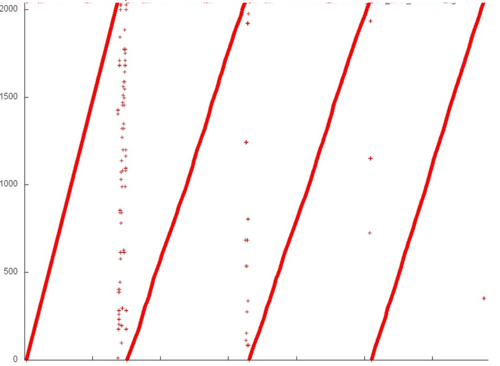

Fig.3 shows the memory access profile for an implementation of Borůvka's algorithm. Similarly to most of the graph algorithms, this one has an irregular structure. Note right away that, for implementations of such algorithms, the locality depends in many ways on the structure of the input graph and may change significantly. Here, we consider only one of the possible variants.

One can see that the overall profile consists of four rather similar stages (which are separated by vertical lines in fig.3). Since the structure of this profile is irregular, it is better to examine all the stages.

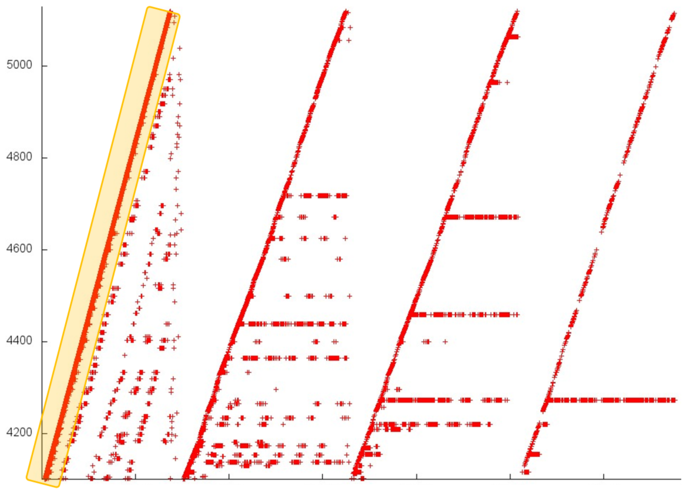

We begin with the analysis of the upper part of the profile (fragment 1 in fig.3). It is shown in fig.4. At each stage, the major portion of memory accesses are successive searches through the elements of this fragment (highlighted in yellow in fig.4). Other accesses are structured differently at different stages. At the first stage, the accesses are scattered rather far from each other, which implies a low locality (both spatial and temporal). By contrast, at the last stage, almost all accesses (apart from the successive search) are performed to one and the same element, which, naturally, ensures a very high locality. Such a structure of the entire fragment most likely results in moderate values of both the spatial and temporal locality.

Now, we turn to the analysis of fragment 2 (fig.5). Here, one can see that all the four stages differ widely in structure. Similarly to the situation with fragment 1, each next stage has a higher locality, but, here, this feature is more evident. Note that this fragment involves only about 60 elements, whereas these elements are accessed fairly often. Consequently, the locality in this case is high.

On the whole, a similar picture is observed in fragment 3. In fig.6, one can see four stages with a resembling structure, and, again, about 60 elements are involved. This allows us to assert the high locality of this fragment.

A separate examination of fragment 4 (see fig.7) allows one to see that the locality is determined here by four successive searches through all the elements of this fragment. These searches have the conventional structure: step through memory equal to 1, and a sole access to each element. A small distortion of the searches is caused by an irregular activity in other fragments, which results in the contortion of the visual representation of the profile. Such a set of accesses has a high spatial locality but a low temporal one.

Thus, fragments 2 and 3 are characterized by a high locality, while the other two fragments have a modest locality. Since the majority of accesses are exactly those to fragments 2 and 3, it seems likely that the overall locality is rather high.

2.2.1.2 Quantitative estimation of locality

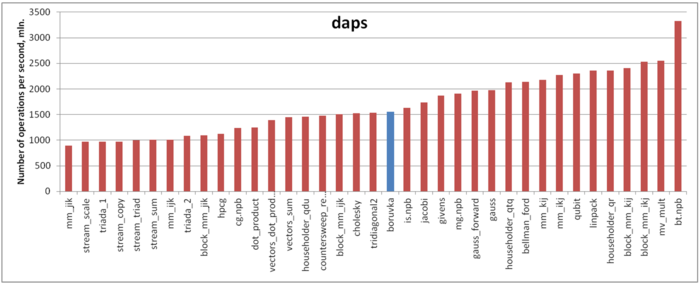

Our estimate is based on daps, which assesses the number of memory accesses (reads and writes) per second. Similarly to flops, daps is used to evaluate memory access performance rather than locality. Yet, it is a good source of information, particularly for comparison with the results provided by the estimate cvg.

Fig.8 shows daps values for implementations of popular algorithms, sorted in ascending order (the higher the daps, the better the performance in general). One can see that the memory access performance of this implementation is not bad. In particular, its daps value is comparable with that for the implementation of the Cholesky method. However, it is considerably smaller than those for the most productive implementations (for example, that of the Linpack benchmark). On the whole, this is not surprising for graph algorithms, which traditionally use memory inefficiently.

2.3 Possible methods and considerations for parallel implementation of the algorithm

A program implementing Borůvka's algorithm consists of two parts:

1. the part that is responsible for the general coordination of computations;

2. the part that is responsible for the parallel computations on a multi-core CPU or GPU.

The serial algorithm described above cannot be used in a parallel program: in an implementation of [math]MERGE[/math], the results of the operations [math]FIND(u)[/math] and [math]FIND(v)[/math] may change at any moment because of concurrent updates, which results in a race condition. A parallel variant of the algorithm has been described in the literature and works as follows:

1. Each vertex v is assigned the record [math]A[v] = \{ parent, rank \}[/math]. At first, [math]A[v] := \{ v, 0 \}[/math].

2. The auxiliary operation [math]UPDATE(v, rank_v, u, rank_u)[/math]:

        old := A[v]
        if old.parent != v or old.rank != rank_v then return false
        new := { u, rank_u }
        return CAS(A[v], old, new)

3. The operation [math]FIND(v)[/math]:

        while v != A[v].parent do
            u := A[v].parent
            CAS(A[v].parent, u, A[u].parent)
            v := A[u].parent
        return v

4. The operation UNION(u, v):

        while true do
            (u, v) := (FIND(u), FIND(v))
            if u = v then return false
            (rank_u, rank_v) := (A[u].rank, A[v].rank)
            if (A[u].rank, u) > (A[v].rank, v) then
                swap((u, rank_u), (v, rank_v))
            if UPDATE(u, rank_u, v, rank_u) then
                if rank_u = rank_v then
                    UPDATE(v, rank_v, v, rank_v + 1)
                return true

This variant of the algorithm is guaranteed to have the "wait-free" property. In practice, one may use a simplified version with no count of ranks. It has a weaker "lock-free" property but, in a number of cases, wins in speed.
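The control flow of the operations UPDATE, FIND, and UNION can be tried out with the following Python sketch. The class name ConcurrentUnionFind is hypothetical. Python offers no hardware compare-and-swap, so the helper _cas emulates it with a lock; as a consequence, the sketch reproduces the logic of the algorithm but not its "wait-free" guarantee.

```python
import threading


class ConcurrentUnionFind:
    """Union-Find driven by compare-and-swap on (parent, rank) records.

    CAS is emulated with a lock, so this sketch is not actually wait-free.
    """

    def __init__(self, n):
        self._records = [(v, 0) for v in range(n)]  # A[v] = (parent, rank)
        self._lock = threading.Lock()

    def _cas(self, v, old, new):
        # Emulated atomic compare-and-swap on the whole record A[v].
        with self._lock:
            if self._records[v] != old:
                return False
            self._records[v] = new
            return True

    def _update(self, v, rank_v, u, rank_u):
        old = self._records[v]
        if old != (v, rank_v):
            return False  # v is no longer a root of the expected rank
        return self._cas(v, old, (u, rank_u))

    def find(self, v):
        while True:
            parent, rank = self._records[v]
            if parent == v:
                return v
            grandparent = self._records[parent][0]
            # Path halving: try to point v at its grandparent.
            self._cas(v, (parent, rank), (grandparent, rank))
            v = grandparent

    def union(self, u, v):
        while True:
            u, v = self.find(u), self.find(v)
            if u == v:
                return False
            rank_u, rank_v = self._records[u][1], self._records[v][1]
            if (rank_u, u) > (rank_v, v):
                u, rank_u, v, rank_v = v, rank_v, u, rank_u
            # Attach the root with the smaller (rank, id) to the other root.
            if self._update(u, rank_u, v, rank_u):
                if rank_u == rank_v:
                    self._update(v, rank_v, v, rank_v + 1)
                return True
```

Several threads may call union on the same object concurrently; a failed _update simply makes the loop in union retry with freshly computed roots.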

2.4 Scalability of the algorithm and its implementations

2.4.1 Scalability of the algorithm

The possibility of processing fragments independently of each other implies a good scalability of the algorithm. The restraining factors are:

- memory bandwidth while reading information on the graph;

- contention between threads while performing atomic operations with memory;

- barrier synchronization after each half step of the algorithm.

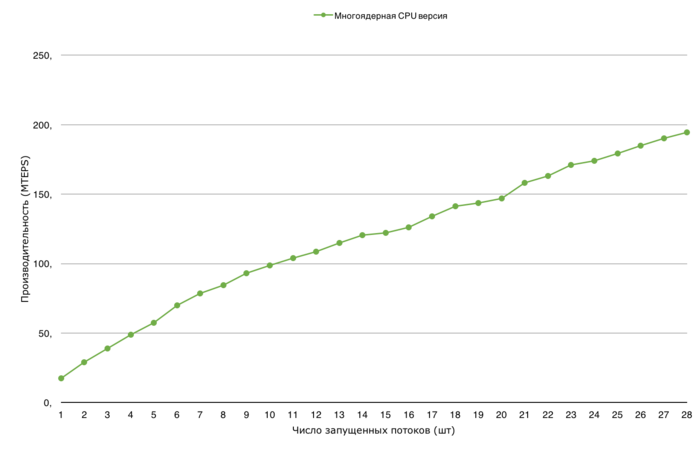

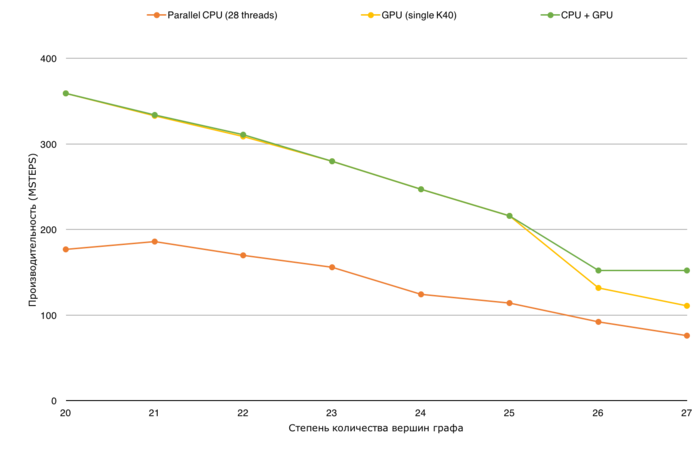

2.4.2 Scalability of the algorithm implementation

Let us study the scalability of the parallel implementation of Borůvka's algorithm in accordance with the scalability methodology. This study was conducted using the Lomonosov-2 supercomputer of the Moscow University Supercomputing Center.

Variable parameters for the start-up of the algorithm implementation and the limits of parameter variations:

- number of processors [1 : 28] with the step 1;

- graph size [2^20 : 2^27].

We perform separate analyses of strong scalability and scaling-out of the implementation of Borůvka's algorithm.

The performance is defined as TEPS (an abbreviation for Traversed Edges Per Second), that is, the number of graph arcs processed by the algorithm in a second. Using this characteristic, one can compare the performance for graphs of different sizes and estimate how the performance gets worse when the graph size increases.
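The definition of TEPS amounts to a single division, as in the sketch below. The function names teps and measure_teps are hypothetical; measure_teps assumes that the algorithm under test is a callable taking the number of vertices and the edge list.

```python
import time


def teps(num_edges, seconds):
    """Traversed edges per second for one run of the algorithm."""
    if seconds <= 0:
        raise ValueError("elapsed time must be positive")
    return num_edges / seconds


def measure_teps(algorithm, num_vertices, edges):
    """Time one call of algorithm(num_vertices, edges) and report TEPS."""
    start = time.perf_counter()
    algorithm(num_vertices, edges)
    elapsed = time.perf_counter() - start
    return teps(len(edges), elapsed)
```

For instance, with made-up numbers, a run that processes 1000 edges in 0.5 seconds gives teps(1000, 0.5) = 2000.0.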

2.5 Dynamic characteristics and efficiency of the algorithm implementation

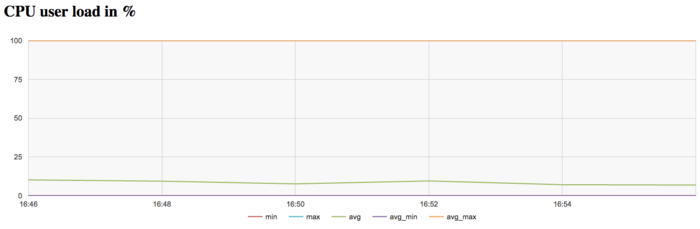

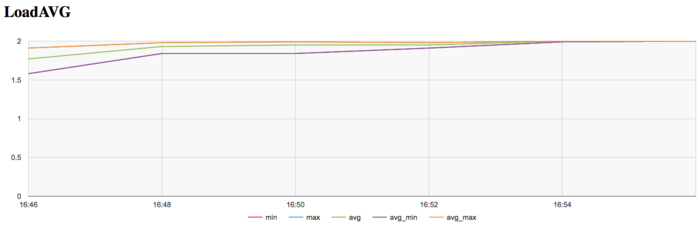

The experiments were conducted with Borůvka's algorithm implemented for CPU. All the results were obtained with the «Lomonosov-2» supercomputer. We used Intel Xeon E5-2697v3 processors. The problem was solved for a large graph (of size 2^27) on a single node. Only one iteration was performed. The figures below illustrate the efficiency of this implementation.

The graph of CPU loading shows that the processor is idle almost all the time: the average level of loading is about 10 percent. This is an ordinary result for programs launched with the use of only one core.

The graph of the number of processes waiting to start the calculation stage (Loadavg) shows that the value of this parameter in the course of the program execution is always close to 2. This indicates that the hardware resources are loaded by at most two processes at any time. Such a small number points to a rather inefficient use of resources.

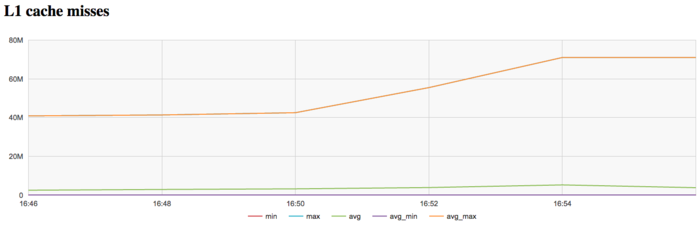

The graph of L1 cache misses shows that the number of misses is very large (at the level of 40 million per second). It is interesting that this number increases to the level of 70 million per second toward the end of the iteration.

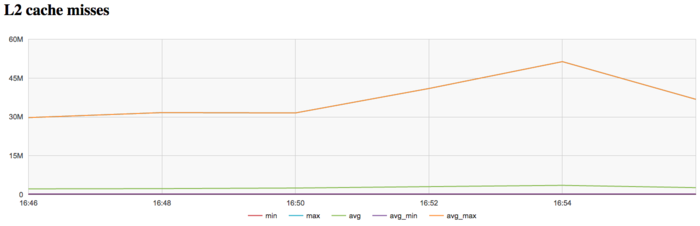

The graph of L2 cache misses shows that the number of such misses is also very large (at the level of 30 million per second). This number increases toward the end of the iteration (to the level of 50 million per second). Such an increase is more evident here than in figure 13.

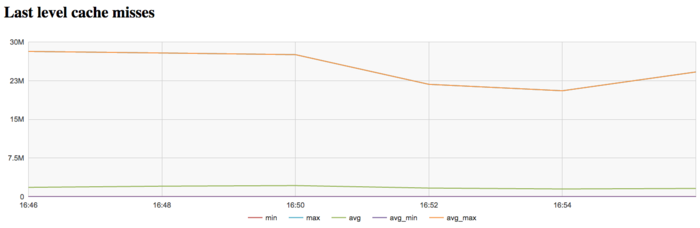

The graph of L3 cache misses shows that the number of these misses is again large; it is about 30 million per second. This indicates that the problem fits very badly into cache memory, and the program is compelled to work with RAM all the time, which is explained by the very large size of the input graph.

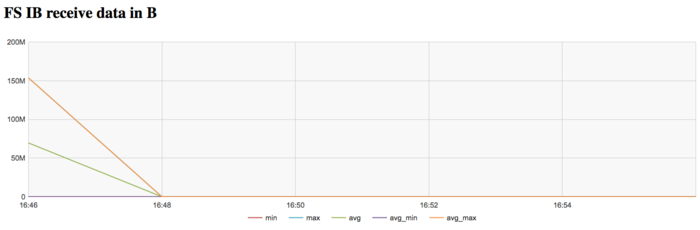

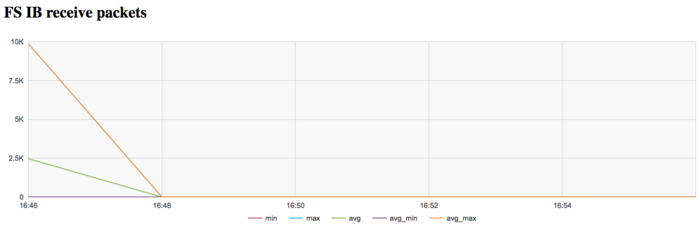

The graph of the data rate through the Infiniband network shows a fairly high intensity of using this network at the first stage. This is explained by the program logic, which assumes the reading of the graph from a disk file. On Lomonosov-2, communications with this disk are performed through a dedicated Infiniband network.

The graph of the data rate measured in packets per second demonstrates a similar picture of very high intensity at the first stage of executing the problem. Later on, the network is almost not used.

On the whole, the data of system monitoring make it possible to conclude that the program worked with a stable intensity. However, it used the memory very inefficiently due to the extremely large size of the graph.

2.6 Conclusions for different classes of computer architecture

2.7 Existing implementations of the algorithm

- C++, MPI: Parallel Boost Graph Library; the functions dense_boruvka_minimum_spanning_tree, boruvka_then_merge, and boruvka_mixed_merge combine Borůvka's algorithm and Kruskal's algorithm.

- RCC for CPU

- RCC for GPU