Difference between revisions of "Forward substitution"

| [quality revision] | [checked revision] |

| (4 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

| − | Primary authors of this description: [[:ru:Участник:Frolov|A.V.Frolov]] | + | {{algorithm |

| + | | name = Forward substitution | ||

| + | | serial_complexity = <math>O(n^2)</math> | ||

| + | | input_data = <math>O(n^2)</math> | ||

| + | | output_data = <math>n</math> | ||

| + | | pf_height = <math>O(n)</math> | ||

| + | | pf_width = <math>O(n)</math> | ||

| + | }} | ||

| + | Primary authors of this description: [[:ru:Участник:Frolov|A.V.Frolov]]. | ||

== Properties and structure of the algorithm == | == Properties and structure of the algorithm == | ||

| Line 146: | Line 154: | ||

</source> | </source> | ||

| − | In this case, the <math>S</math> variable must be | + | In this case, the <math>S</math> variable must be double precision to implement the accumulation mode. |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

=== Possible methods and considerations for parallel implementation of the algorithm === | === Possible methods and considerations for parallel implementation of the algorithm === | ||

| − | === | + | === Run results === |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

=== Conclusions for different classes of computer architecture === | === Conclusions for different classes of computer architecture === | ||

| − | == | + | == References == |

[[Ru:Прямая подстановка (вещественный вариант)]] | [[Ru:Прямая подстановка (вещественный вариант)]] | ||

[[Category:Articles in progress]] | [[Category:Articles in progress]] | ||

Latest revision as of 09:48, 18 July 2022

| Forward substitution | |

| Sequential algorithm | |

| Serial complexity | [math]O(n^2)[/math] |

| Input data | [math]O(n^2)[/math] |

| Output data | [math]n[/math] |

| Parallel algorithm | |

| Parallel form height | [math]O(n)[/math] |

| Parallel form width | [math]O(n)[/math] |

Primary authors of this description: A.V.Frolov.

Contents

- 1 Properties and structure of the algorithm

- 1.1 General description of the algorithm

- 1.2 Mathematical description of the algorithm

- 1.3 Computational kernel of the algorithm

- 1.4 Macro structure of the algorithm

- 1.5 Implementation scheme of the serial algorithm

- 1.6 Serial complexity of the algorithm

- 1.7 Information graph

- 1.8 Parallelization resource of the algorithm

- 1.9 Input and output data of the algorithm

- 1.10 Properties of the algorithm

- 2 Software implementation of the algorithm

- 3 References

1 Properties and structure of the algorithm

1.1 General description of the algorithm

Forward substitution is the process of solving a system of linear algebraic equations (SLAE) [math]Lx = y[/math] with a lower triangular coefficient matrix [math]L[/math]. The matrix [math]L[/math] is a factor of the matrix [math]A[/math] and results from either the [math]LU[/math]-decomposition of the latter obtained by any of numerous ways (such as simple Gaussian elimination or Gaussian elimination with pivoting or compact schemes of Gaussian elimination) or other types of decomposition. The triangular form of [math]L[/math] ensures that the process of solving a SLAE is a modification of the general substitution method and this process can be described by simple formulas.

In[1], the process of solving a SLAE with a lower triangular coefficient matrix was named the back substitution. It was also noted in [1] that, in the literature, back substitution is usually regarded as solving a SLAE with a right triangular matrix, whereas the solution of left triangular systems is called the forward substitution. We adopt this nomenclature in order to avoid using identical names for different algorithms. Furthermore, unlike the forward substitution treated here, the back substitution, as presented in this algorithmic encyclopedia, is at the same time one of the stages in Gaussian elimination for solving SLAEs, namely, the final stage of this method.

Nevertheless, the structure of the forward substitution for a nonsingular left triangular matrix is virtually identical to the structure of the back substitution. Here, we consider the case where [math]L[/math] is a matrix obtained from a Gauss-like decomposition and, hence, [math]L[/math] has unit diagonal entries.

1.2 Mathematical description of the algorithm

Input data: left triangular matrix [math]L[/math] (with entries [math]l_{ij}[/math]), right-hand side vector [math]b[/math] (with components [math]b_{i}[/math]).

Output data: solution vector [math]y[/math] (with components [math]y_{i}[/math]).

Formulas of the method:

- [math] \begin{align} y_{1} & = b_{1} \\ y_{i} & = b_{i} - \sum_{j = 1}^{i-1} l_{ij} y_{j}, \quad i \in [2, n]. \end{align} [/math]

There exists also a block version of the method; however, this description treats only the pointwise version.

1.3 Computational kernel of the algorithm

The computational kernel of the forward substitution could be compiled of repeated dot products of the rows of [math]L[/math] and the already determined portions of the vector [math]y[/math] (there are on the whole [math]n-1[/math] products) :

- [math] \sum_{j = 1}^{i-1} l_{ij} y_{j} [/math]

Depending on the problem requirements, these products are calculated with or without using the accumulation mode. Then they are subtracted from the corresponding components of the vector [math]b[/math], and the results are divided by the corresponding diagonal entries of [math]L[/math]. This scheme is, however, not used in domestic implementations, even in serial ones. In these implementations, sums of the type

- [math] b_{i} - \sum_{j = 1}^{i-1} l_{ij} y_{j} [/math]

(and dot products are exactly such sums) are not calculated according to the rule "first, find the dot product and then subtract it from the given element". Instead, the componentwise products, which are summands of the dot product, are one by one subtracted from this element. Consequently, the calculation of the expressions

- [math] b_{i} - \sum_{j = 1}^{i-1} l_{ij} y_{j} [/math]

rather than dot products should be regarded as the computational kernel of the forward substitution. Depending on the problem requirements, these expressions are calculated with or without using the accumulation mode.

1.4 Macro structure of the algorithm

As already noted in the description of the computational kernel, the basic part of the forward substitution is compiled of repeated calculations of the sums

- [math] b_{i} - \sum_{j = 1}^{i-1} l_{ij} y_{j} [/math]

(there are on the whole [math]n-1[/math] sums). These calculations are performed with or without using the accumulation mode.

1.5 Implementation scheme of the serial algorithm

To make the order of calculations clearer, rewrite the formulas of the method as follows.

1. [math]y_{1} = b_{1}[/math]

Then, for [math]i[/math] increasing from [math]2[/math] to [math]n[/math], do

2. [math]y_{i} = b_{i} - \sum_{j = 1}^{i-1} l_{ij} y_{j}[/math]

We emphasize that sums of the form [math]b_{i} - \sum_{j = 1}^{i-1} l_{ij} y_{j}[/math] are calculated in the accumulation mode by subtracting from [math]b_{i}[/math] the products [math]l_{ij} y_{j}[/math]for [math]j[/math] increasing from [math]1[/math] to [math]i-1[/math]. Any other order of summation makes the parallel properties of the algorithm much worse.

1.6 Serial complexity of the algorithm

The forward substitution of order n in its serial (that is, the fastest) version requires

- [math]\frac{n^2-n}{2}[/math] multiplications and the same number of additions (subtractions).

The use of accumulation requires that multiplications and subtractions be done in the double precision mode (or such procedures as the Fortran function DPROD be used). This increases the computation time for performing the forward substitution.

In terms of serial complexity, the forward substitution is qualified as a quadratic complexity algorithm.

1.7 Information graph

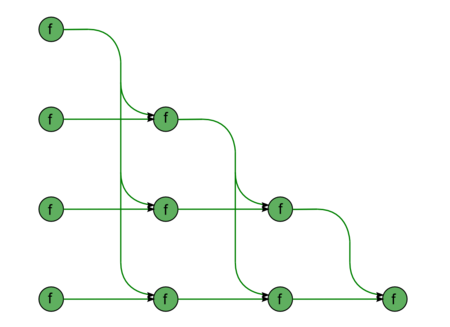

We describe the graph of the algorithm both analytically and graphically.

The graph of forward substitution consists of a single group of vertices placed at integer nodes of a two-dimensional domain. The corresponding operation is [math]a-bc[/math].

The natural coordinates of this domain are as follows:

- [math]i[/math] changes from [math]2[/math] to [math]n[/math], taking all the integer values in this range;

- [math]j[/math] changes from [math]1[/math] to [math]i-1[/math], taking all the integer values in this range.

The arguments of the operation are as follows:

- [math]a[/math] is:

- the element [math]b_{i}[/math] of the input data if [math]j = 1[/math];

- the result of performing the operation corresponding to the vertex with coordinates [math]i, j-1[/math] if [math]j \gt 1[/math];

- [math]b[/math] is the element [math]l_{ij}[/math] of the input data;

- [math]c[/math] is:

- the element [math]b_{1}[/math] of the input data if [math]j = 1[/math];

- the result of performing the operation corresponding to the vertex with coordinates [math]j, j-1[/math] if [math]j \gt 1[/math].

The result of performing the operation is:

- an intermediate data item if [math]j \lt i-1[/math] ;

- an output data item if [math]j = i-1[/math] .

The graph described can be seen in the figure corresponding to the case [math]n = 5[/math]. The vertices are depicted in green. The supplies of the input data from the vector [math]b[/math] to the vertices of the left column and from the matrix [math]L[/math] to all the vertices are not shown in the figure.

1.8 Parallelization resource of the algorithm

The parallel version of the forward substitution of order n requires that the following layers be successively performed:

- [math]n - 1[/math] layers involving multiplications and additions / subtractions. (The number of operations changes linearly from [math]1[/math] to [math]n-1[/math].)

The use of accumulation requires that multiplications and subtractions be done in the double precision mode. For the parallel version, this implies that virtually all the intermediate calculations in the algorithm must be performed in double precision if the accumulation option is used. Unlike the situation with the serial version, this results in a certain increase in the required memory size.

In terms of the parallel form height, the forward substitution is qualified as a linear complexity algorithm. Its complexity is also linear in terms of the parallel form width.

1.9 Input and output data of the algorithm

Input data: left triangular matrix [math]L[/math] (with entries [math]l_{ij}[/math]), right-hand side vector [math]b[/math] (with components [math]b_{i}[/math]).

Size of the input data : [math]\frac{n (n + 1)}{2}[/math] (since [math]L[/math] is triangular and has unit diagonal entries, it suffices that only its subdiagonal entries be stored).

Output data : solution vector [math]y[/math] (with components [math]y_{i}[/math]).

Size of the output data: [math]n~.[/math]

1.10 Properties of the algorithm

It is clear that, in the case of unlimited resources, the ratio of the serial to parallel complexity is linear (since this is the ratio of the quadratic to linear complexity).

The computational power of the forward substitution, understood as the ratio of the number of operations to the total size of the input and output data, is only a constant.

In this version, the algorithm of forward substitution is completely determined. We do not consider any other order of performing operations because, otherwise, the structure of the algorithm would radically change and the parallel complexity would be quadratic.

2 Software implementation of the algorithm

2.1 Implementation peculiarities of the serial algorithm

In its simplest form, the forward substitution algorithm in Fortran can be written as follows:

Y(1) = B(1)

DO I = 2, N-1

S = B(I)

DO J = 1, I-1

S = S - DPROD(L(I,J), Y(J))

END DO

END DO

In this case, the [math]S[/math] variable must be double precision to implement the accumulation mode.

2.2 Possible methods and considerations for parallel implementation of the algorithm

2.3 Run results

2.4 Conclusions for different classes of computer architecture

3 References

- ↑ Voevodin V.V., Kuznetsov Yu.A. Matrices and computations. Moscow, Nauka Publ., 1984. 320 p. (in russian)