Two-sided Thomas, locality

Primary author of this description: Vad.V.Voevodin (Section 2), A.M.Teplov (Section 3).

Contents

1 Links

The basic fragment of the implementation used for obtaining quantitative estimates is given here (the Kernel function).

2 Locality of data and computations

One can see from the algorithm graph that the spatial locality of the data is fair. Indeed, all the arguments needed for an operation are calculated "nearby". However, the temporal locality is not as good. Suppose that the cache cannot hold all the problem data. Then repeated cache misses will occur while performing calculations in the "upper left" and "lower right" corners of a SLAE. Thus, one of the recommendations to application engineers using the two-sided elimination method is as follows: Arrange the calculations so that all the elimination paths are sufficiently short and their data can be placed in the cache.

The two-sided elimination method is an example of a serial algorithm with the locality of computations so high that it even becomes excessive when the two basic branches are implemented separately by different cores or serially one after the other [1]. Computational cores of processors virtually cannot use their superscalar capabilities because the data needed by the basic operations of this method are produced by immediately preceding operations. This sharply deteriorates the performance of the method even for modern single-processor and single-core systems. That is why, in the above subroutine, both branches (of the forward, as well as the backward, elimination path) are brought together into a single loop. However, since the efficiency remains low, the only advantage is attained over the exceedingly local monotone elimination.

2.1 Locality of implementation

2.1.1 Structure of memory access and a qualitative estimation of locality

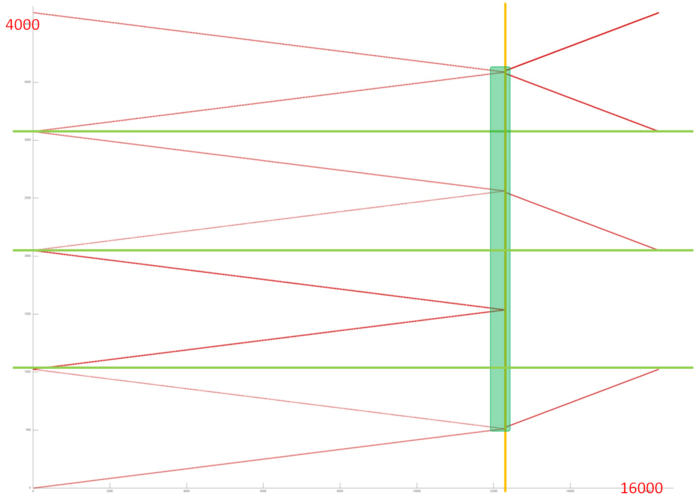

Figure 1 presents the memory access profile for an implementation of the pointwise version of two-sided elimination method. This profile consists of two stages separated by the yellow line. Four arrays are accessed at each stage. Accesses to different arrays are separated by green horizontal lines. Judging from the general profile, one can expect that about the same accesses occur at the first stage to all arrays. In each case, two concurrently executed serial accesses are formed. One of them is arranged in increasing order of the array entries, while the other is arranged in decreasing order.

At the second stage, profiles are different for different arrays; however, they again resemble conventional serial accesses. If this is true, then this profile has a high spatial locality (accesses to neighboring entries are close to each other) but a fairly low temporal locality (a repeated usage of the data is virtually absent). Let us look at the profile in more detail in order to verify our conclusions.

A fragment of the general profile (set out in green in fig. 1) is shown in fig. 2. One can see that the accesses to four arrays do indeed have a regular structure and neighboring entries are indeed accessed at close moments. It is, however, difficult to determine up to isolated accesses how these four separate profiles are structured.

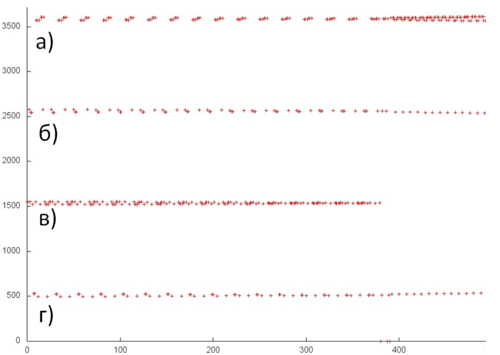

The profiles shown in fig. 2 are inspected more closely in fig. 3. At this scale, one can see that, on the whole, all the profiles do indeed consist of serial accesses, but a different number of references is made at each step of the access. The profile in fig. 3a references the current array element and the preceding one, while the profile in fig. 3b seems to reference also the next array element. The profiles 3c and 3d are structured somewhat simpler because only one serial access performs two references per step.

Based on information obtained, one can infer that the access locality is even better than what was suggested in the discussion of the general profile. The reason is that the same data are often used several times in succession, which improves both spatial and temporal locality.

2.1.2 Quantitative estimation of locality

The start-up parameters are described here.

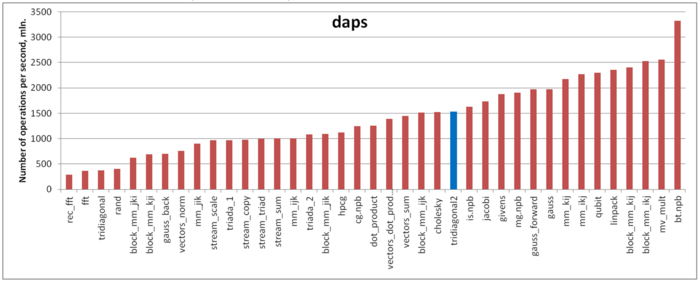

The first estimate is produced on the basis of daps, which estimates the number of memory accesses (reading and writing) per second. This characteristic, used for the interaction with memory, is an analog of the flops estimate. It largely estimates the performance of this interaction rather than locality. Nevertheless, this characteristic (in particular, its comparison with the next characteristic cvg) is a good source of information.

Figure 4 presents the values of daps for implementations of popular algorithms. They are arranged in increasing order (in general, the larger daps, the higher efficiency). On the whole, the daps value of this subroutine indicates a fairly high performance of memory interaction, which corresponds to our expectations stated in the locality description given above.

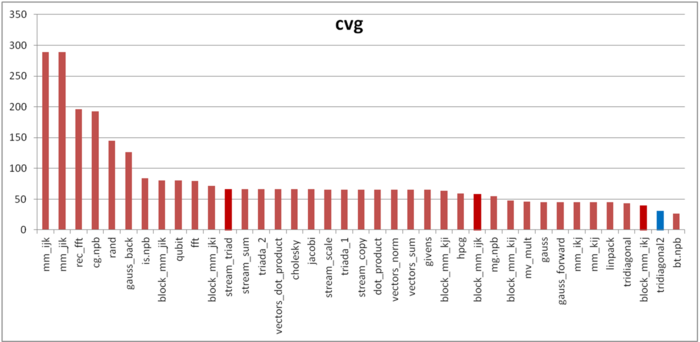

The second characteristic, called cvg, is intended for obtaining a locality estimate that would be more machine independent. It determines how often a program needs to fetch data to the cache memory. Accordingly, smaller values of cvg correspond to less often fetch operations and better locality.

Figure 5 presents the values of cvg for the same set of implementations. They are arranged in decreasing order (in general, the smaller cvg, the higher locality). The cvg value of this subroutine is quite high; it is even higher than in the case of the Linpack test. One can notice a marked difference between the daps and cvg estimates. It is possible that the reasons for this difference are the same as for the conventional elimination method. The latter are described here.

3 Scalability of the algorithm and its implementations

3.1 Scalability of the algorithm

There is no sense in discussing the scalability of the two-sided elimination method because it is almost non-parallel. The case of two-processor systems is a possible exception. The concept of scalability is inapplicable since no parallel implementation is intended for this algorithm.

3.2 Scalability of of the algorithm implementation

An analysis of scalability was performed for this algorithm as implemented in accordance with the methodology. The analysis was based on the experiments conducted on the Lomonosov supercomputer [2] of the Moscow University Supercomputing Center.

The variable start-up parameters of this implementation and their ranges are as follows:

- the number of processors is 1;

- the domain size varies in the range [10240 : 1024000] with the step 10240.

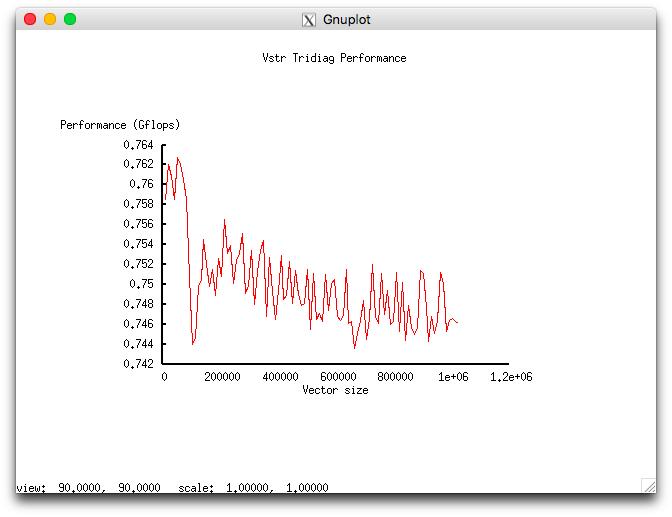

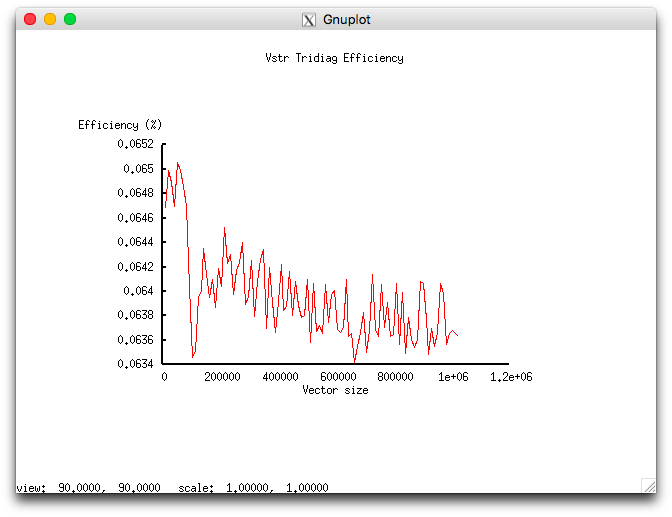

The experiments resulted in the following range for the implementation efficiency of this algorithm:

- the minimum efficiency is 0.0634%;

- the maximum efficiency is 0.0651%.

The following two figures show how the performance and efficiency of this implementation depend on the variable start-up parameters.

The meager efficiency is likely to be related to the excessive locality discussed in the section on the locality of data and computations.

4 Dynamic characteristics and efficiency of the algorithm implementation

In view of the serial nature of the algorithm and its excessive locality, the analysis of its dynamic characteristics seems to be of little value; therefore, it was not conducted.

5 Run results

6 References

- ↑ A.V. Frolov, A.S. Antonov, Vl.V. Voevodin, A.M. Teplov. Comparison of different methods for solving the same problem on the basis of Algowiki methodology (in Russian) // Submitted to the conference "Parallel computational technologies" (PaVT'2016)"

- ↑ Vl. Voevodin, S. Zhumatii, S. Sobolev, A. Antonov, P. Bryzgalov, D. Nikitenko, K. Stefanov, Vad. Voevodin. Supercomputer "Lomonosov": Our Experience // Open Systems, 2012, N 7, P. 36-39 (in Russian)