Horners method

| Horners method | |

| Sequential algorithm | |

| Serial complexity | [math]2 n^3[/math] |

| Input data | [math]n^2[/math] |

| Output data | [math]n^2[/math] |

| Parallel algorithm | |

| Parallel form height | [math]11n-16[/math] |

| Parallel form width | [math]O(n^2)[/math] |

Main authors: Alexey Frolov, Vadim Voevodin (part 2.2)

Contents

- 1 Properties and structure of the algorithm

- 1.1 General description of the algorithm

- 1.2 Mathematical description of the algorithm

- 1.3 Computational kernel of the algorithm

- 1.4 Macro structure of the algorithm

- 1.5 Implementation scheme of the serial algorithm

- 1.6 Serial complexity of the algorithm

- 1.7 Information graph

- 1.8 Parallelization resource of the algorithm

- 1.9 Input and output data of the algorithm

- 1.10 Properties of the algorithm

- 2 Software implementation of the algorithm

- 2.1 Implementation peculiarities of the serial algorithm

- 2.2 Locality of data and computations

- 2.3 Possible methods and considerations for parallel implementation of the algorithm

- 2.4 Scalability of the algorithm and its implementations

- 2.5 Dynamic characteristics and efficiency of the algorithm implementation

- 2.6 Conclusions for different classes of computer architecture

- 2.7 Existing implementations of the algorithm

- 3 References

1 Properties and structure of the algorithm

1.1 General description of the algorithm

1.1.1 Formulation of the problem

Horner’s scheme is devoted to the division of a polynomial [math]P_n(x)[/math] with known coefficients by the binomial [math]x - \alpha[/math]. The results of this operation are the coefficients of the polynomial [math]Q_{n - 1}(x)[/math] obtained by the relation

- [math]P_n(x) - P_n(\alpha) = (x - \alpha) Q_{n - 1}(x)[/math]

and the value [math]P_n(\alpha)[/math] of the polynomial [math]P_n(x)[/math] at a given point [math]\alpha[/math].

Unfortunately, Horner’s method is often reduced only to the calculation of a polynomial [math]P_n(x)[/math] at the point [math]\alpha[/math].

1.1.2 General scheme

Actually, Horner’s scheme implements the long division of a polynomial by the binomial [math]x - \alpha[/math]. By the Bezout theorem, the remainder of this operation is equal to the value of a polynomial [math]P_n(x)[/math] at a point [math]\alpha[/math].

1.2 Mathematical description of the algorithm

Input data: a one-dimensional array consisting of [math]n + 1[/math] numbers [math]a_k[/math] and a scalar [math]\alpha[/math].

Output data: a one-dimensional array consisting of [math]n + 1[/math] numbers [math]b_k[/math].

The formulas of Horner's method are based on the relation

- [math]P_n(x) - P_n(\alpha) = (x - \alpha) Q_{n - 1}(x)[/math].

Now we represent the above polynomials in canonical form:

- [math] \begin{align} P_n(x) & = a_0 x^n+ a_1 x^{n - 1} + \dots + a_{n - 1} x + a_n, \\ Q_{n - 1}(x) & = b_0 x^{n - 1} + b_1 x^{n - 2} + \dots + b_{n - 2} x + b_{n - 1}. \end{align} [/math]

By [math]b_n[/math] we denote [math]P_n(\alpha)[/math]. Substituting these polynomials in their canonical form into the above relation and equating the coefficients at equal powers of [math]x[/math], we come to the following formulas of Horner’s scheme:

- [math] \begin{align} b_0 & = a_0, \\ b_k & = a_k + \alpha b_{k - 1}, \quad k = 1, \dots, n. \end{align} [/math]

1.3 Computational kernel of the algorithm

The computational kernel of Horner’s algorithm in its serial version can be represented as a sequential set of [math]n[/math] «double» operations: the multiplication of elements of the output array by one and the same scalar and the addition of the result to the next element of the input array.

1.4 Macro structure of the algorithm

From the computational kernel of Horner’s algorithm it follows that its major part consists of the sequential set of the above «double» operations.

1.5 Implementation scheme of the serial algorithm

The formulas of the algorithm are described above. The operations are performed in increasing order of [math]k[/math].

1.6 Serial complexity of the algorithm

For a polynomial of degree [math]n[/math], the number of multiplications is equal to [math]n[/math] and the number of additions is also equal to [math]n[/math]. Hence, Horner’s algorithm is of linear complexity with respect to the number of serial operations.

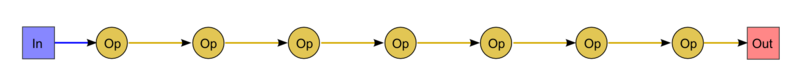

1.7 Information graph

The graph of the algorithm is illustrated in Fig.1 for [math]n = 9[/math]. As can be seen, the graph is serial.

Here the abbreviations In (Input) and Out (Output) are used to denote the first coefficient of the input array and the value of the polynomial at a given point [math]\alpha[/math], respectively. By Op we denote the "double" operation: the multiplication of an input variable by a scalar and the addition of the result to another variable.

1.8 Parallelization resource of the algorithm

The serial version of Horner’s algorithm has no parallelization resource. Its parallel form consists of a single layer and is coincident with the serial algorithm. Thus, the height of the parallel form is equal to [math]n[/math] multiplications plus [math]n[/math] additions. Hence, this algorithm is of linear complexity with respect to the height of the parallel form. The width of the parallel form is equal to [math]1[/math]; hence, this algorithm is of constant complexity with respect to the width of the parallel form.

1.9 Input and output data of the algorithm

Input data: an array [math]a[/math] (its elements are denoted by [math]a_i[/math], where [math]i = 0, \dots, n[/math]) and a scalar [math]\alpha[/math].

Additional constraints: absent.

Amount of input data: [math]n + 2[/math].

Output data: an array [math]b[/math] (its elements are denoted by [math]b_k[/math], where [math]k = 0, \dots, n[/math])

Amount of output data: [math]n + 1[/math].

1.10 Properties of the algorithm

In the case of unlimited computer resources, the ratio of the serial complexity to the parallel complexity is constant (1). Computational power of the algorithm considered as the ratio of the number of operations to the total amount of input and output data is equal to 1 (the number of input and output data is even larger by 1 than the number of operations). The algorithm is completely deterministic. The arcs of the information graph are local. The stability of Horner’s scheme is optimal for the calculation of the values of a polynomial with known coefficient.

2 Software implementation of the algorithm

2.1 Implementation peculiarities of the serial algorithm

Horner’s scheme can be represented in Fortran as

b(0) = a(0)

DO I = 1, N

b(I) = a(I)+b(I-1)*alpha

END DO

2.2 Locality of data and computations

2.2.1 Locality of implementation

2.2.1.1 Structure of memory access and a qualitative estimation of locality

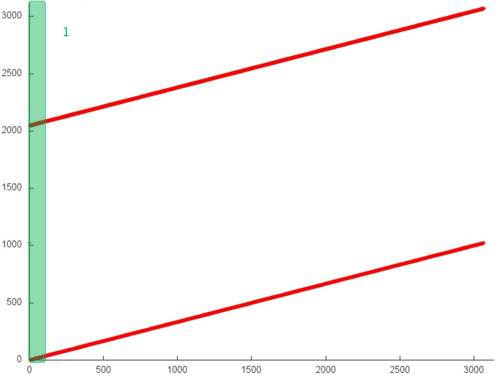

The memory-access profile is illustrated in Fig.2 for a serial implementation of Horner’s scheme in the case of polynomials with real coefficients. From this figure it follows that the structure of this profile is very simple and consists of two linear (or sequential) searches of elements performed for two real arrays in parallel.

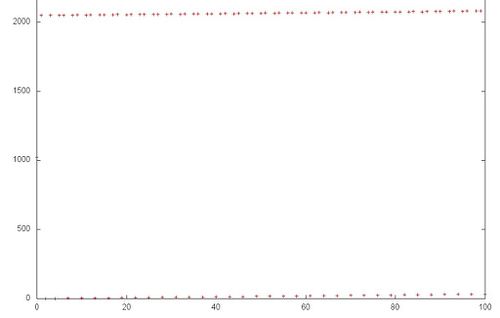

However, this simple example shows that, in order to completely understand a general structure of memory access, it is necessary to carefully analyze this structure at the level of individual memory references. Fig.3 illustrates fragment 1 highlighted in green in Fig.2. As can be seen, the upper sequential search is somewhat different from the lower one: in the first case, each element is referenced twice in succession. Note, however, this refinement of the memory-access profile structure has a little effect on the locality of the entire profile.

On the whole, the general memory-access profile possesses a high spatial locality, since the search of elements in the arrays is performed sequentially. However, the temporal locality is very low, since each element of one of the arrays is referenced only twice, whereas the elements of the second array are not reused at all.

2.2.1.2 Quantitative estimation of locality

The main fragment of the implementation used to obtain the quantitative estimates is given here (the KernelHorner function). The start conditions are discussed here.

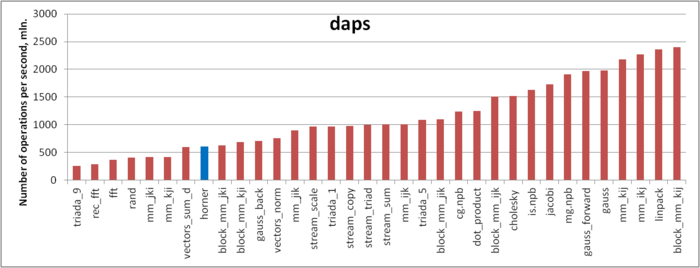

The first estimate is made on the basis of the daps characteristic used to evaluate the number of write and read operations per second. This characteristic is similar to the flops estimate for memory access and is an estimate of the memory usage performance rather than an estimate of locality. However, the daps characteristic is a good information source and can be used to compare with the results obtained according to the cvg characteristic.

Figure 4 illustrates the daps values for a number of some widespread algorithms. These values are given in increasing order: a higher performance corresponds to a larger daps value. From this figure it follows that the implementation of Horner’s scheme is characterized by a low memory usage performance. It may appear strange that in this case the daps value is significantly less than for the STREAM tests, although the memory-access profiles are similar: several simultaneous sequential searches for the elements of arrays.

Such a behaviour of the implementation under study is caused by the peculiarities of the memory structure. As mentioned above, the elements of one of the arrays are referenced twice in succession. In the source code of the implementation, however, the second reference is performed at the next iteration to the previous element:

for (int i = 1; i < size; i++) {

c[i] = a[i] + c[i - 1] * x;

}

Hence, we have the case when the iterations are dependent. As a result, the hardware prefetcher badly prefetches the required cache lines, which leads to a significant slowing of the program speed compared to the other implementations based on the sequential search (for example, the STREAM tests).

Our discussion shows once more that the memory structure is very complex: a minor change made in the loop body leads to an unexpectedly serious slowing of the program.

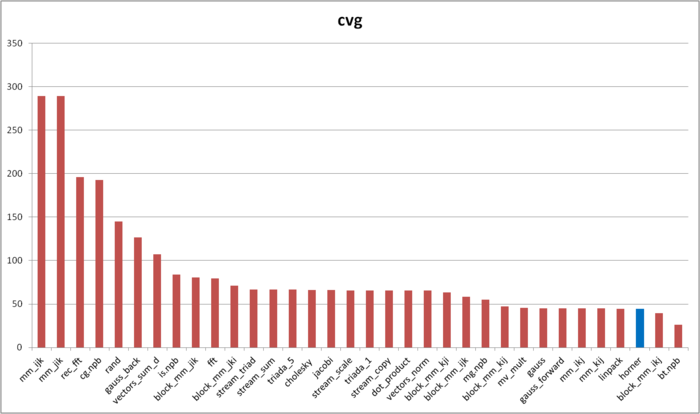

The cvg characteristic is used to obtain a more machine-independent estimate of locality and to specify the frequency of fetching data to the cache memory. A lesser cvg value corresponds to a higher level of locality and to a smaller number of using the fetching procedure.

Fig.5 shows the cvg values for the same implementations presented in Fig.4. These values are given in decreasing order: a higher locality level corresponds to a smaller cvg value. From this figure it follows that the implementation of Horner’s scheme possesses a very high locality according to the cvg estimate.

As shown above, this conclusion does not correspond to the actual memory-access performance because of the peculiarities of the memory structure. However, it is necessary to state the following two remarks. First, the cases when the memory-access performance strongly depends on the hardware peculiarities of the memory structure are not very often in practice. Second, the cvg characteristic is used to obtain a machine-independent estimate of locality; hence, it is not possible to take into account such hardware peculiarities when the analysis of memory access is performed in the current framework of discussion.

2.3 Possible methods and considerations for parallel implementation of the algorithm

This algorithm is not designed to be implemented in parallel.

2.4 Scalability of the algorithm and its implementations

Scalability is not analyzed, since Horner’s algorithm is not designed to be implemented in parallel.

2.5 Dynamic characteristics and efficiency of the algorithm implementation

2.6 Conclusions for different classes of computer architecture

2.7 Existing implementations of the algorithm

In addition to the above simplest implementation, there exist more primitive codes implementing only a part of Horner’s scheme. This can be explained by the fact that in many cases it is necessary to know the value of a polynomial (the remainder of division), but it is not necessary to know only the quotient polynomial with the remainder dropped. In such cases one and the same scalar should be used instead of all the elements of the array [math]b[/math].