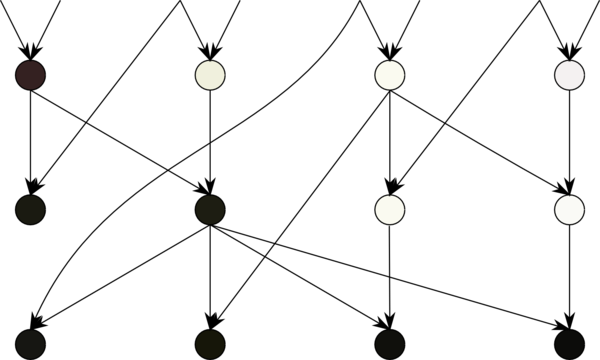

| − | [[file:DoublingPartUniver.png|thumb|right|600px| | + | [[file:DoublingPartUniver.png|thumb|right|600px|Figure 1. Graph of calculating the product of the matrices <math>T_{i}</math> for <math>n=9</math>. Each node at the first layer corresponds to a composite operation consisting of a single multiplication and a single operation of the type <math>a+bc</math>, while each node at other layers corresponds to two operations of the type <math>ab+cd</math>. Results calculated by black nodes are used at later stages, and intermediate results are calculated by light nodes]] |

Latest revision as of 15:51, 8 July 2022

| Stone doubling algorithm for the LU decomposition of tridiagonal matrices | |
|---|---|
| Sequential algorithm | |
| Serial complexity | [math]3(n-1)\lceil \log_2 (n-1) \rceil + o((n-1)\lceil \log_2 (n-1) \rceil)[/math] |
| Input data | [math]3n-2[/math] |
| Output data | [math]2n-1[/math] |
| Parallel algorithm | |
| Parallel form height | [math]2 \lceil \log_2 (n-1) \rceil + 3[/math] |
| Parallel form width | [math]n[/math] |

Main author: Alexey Frolov

Contents

- 1 Properties and structure of the algorithm

- 1.1 General description of the algorithm

- 1.2 Mathematical description of the algorithm

- 1.3 Computational kernel of the algorithm

- 1.4 Macro structure of the algorithm

- 1.5 Implementation scheme of the serial algorithm

- 1.6 Serial complexity of the algorithm

- 1.7 Information graph

- 1.8 Parallelization resource of the algorithm

- 1.9 Input and output data of the algorithm

- 1.10 Properties of the algorithm

- 2 Software implementation of the algorithm

- 3 References

1 Properties and structure of the algorithm

1.1 General description of the algorithm

The Stone doubling algorithm for the LU decomposition of tri-diagonal matrices is a part of the Stone doubling algorithm for solving systems of linear algebraic equations (SLAEs) [1][2] of the form [math]Ax = b[/math], where

- [math] A = \begin{bmatrix} a_{11} & a_{12} & 0 & \cdots & \cdots & 0 \\ a_{21} & a_{22} & a_{23}& \cdots & \cdots & 0 \\ 0 & a_{32} & a_{33} & \cdots & \cdots & 0 \\ \vdots & \vdots & \ddots & \ddots & \ddots & 0 \\ 0 & \cdots & \cdots & a_{n-1 n-2} & a_{n-1 n-1} & a_{n-1 n} \\ 0 & \cdots & \cdots & 0 & a_{n n-1} & a_{n n} \\ \end{bmatrix}, x = \begin{bmatrix} x_{1} \\ x_{2} \\ \vdots \\ x_{n} \\ \end{bmatrix}, b = \begin{bmatrix} b_{1} \\ b_{2} \\ \vdots \\ b_{n} \\ \end{bmatrix} [/math]

The Stone doubling algorithm was first proposed in the early 1970s [3] as an alternative to other parallel algorithms for solving tri-diagonal SLAEs such as, for instance, the cyclic reduction method.

Here, we consider the first part of the algorithm that concerns the [math]LU[/math] decomposition. The aim is to represent the matrix

- [math] A = \begin{bmatrix} a_{11} & a_{12} & 0 & \cdots & \cdots & 0 \\ a_{21} & a_{22} & a_{23}& \cdots & \cdots & 0 \\ 0 & a_{32} & a_{33} & \cdots & \cdots & 0 \\ \vdots & \vdots & \ddots & \ddots & \ddots & 0 \\ 0 & \cdots & \cdots & a_{n-1 n-2} & a_{n-1 n-1} & a_{n-1 n} \\ 0 & \cdots & \cdots & 0 & a_{n n-1} & a_{n n} \\ \end{bmatrix} [/math]

as the product of the matrices

- [math] L = \begin{bmatrix} 1 & 0 & 0 & \cdots & \cdots & 0 \\ l_{21} & 1 & 0 & \cdots & \cdots & 0 \\ 0 & l_{32} & 1 & \cdots & \cdots & 0 \\ \vdots & \vdots & \ddots & \ddots & \ddots & 0 \\ 0 & \cdots & \cdots & l_{n-1 n-2} & 1 & 0 \\ 0 & \cdots & \cdots & 0 & l_{n n-1} & 1 \\ \end{bmatrix} [/math]

and

- [math] U = \begin{bmatrix} u_{11} & u_{12} & 0 & \cdots & \cdots & 0 \\ 0 & u_{22} & u_{23}& \cdots & \cdots & 0 \\ 0 & 0 & u_{33} & \cdots & \cdots & 0 \\ \vdots & \vdots & \ddots & \ddots & \ddots & 0 \\ 0 & \cdots & \cdots & 0 & u_{n-1 n-1} & u_{n-1 n} \\ 0 & \cdots & \cdots & 0 & 0 & u_{n n} \\ \end{bmatrix} [/math]

Importantly, in exact arithmetic, the Stone doubling algorithm produces the same decomposition as the compact scheme of Gaussian elimination.

Theoretically, the Stone method is based on the following considerations. Define the values [math]q_i[/math] by the formulas [math]q_0 = 1[/math], [math]q_1 = a_{11}[/math], and [math]q_i = a_{ii} q_{i-1} - a_{ii-1}a_{i-1i} q_{i-2}[/math] for [math]i[/math] from 2 to [math]n[/math]; these values are the leading principal minors of [math]A[/math]. As soon as all the [math]q_i[/math] are calculated, one can easily find all the entries [math]u_{ii} = q_i/q_{i-1}[/math] and [math]l_{ii-1} = a_{ii-1}/u_{i-1i-1}[/math]. The entries [math]u_{ii+1} = a_{ii+1}[/math] need not be calculated.
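As a minimal serial illustration of these formulas (not the parallel doubling scheme itself), the following Python sketch evaluates the recurrence for [math]q_i[/math] directly and then forms [math]u_{ii}[/math] and [math]l_{ii-1}[/math]. The function name `lu_from_minors` and the 1-based list layout with an unused slot at index 0 are assumptions made here for readability; they do not come from the original description.

```python
def lu_from_minors(diag, sub, sup):
    """Serial evaluation of q_i, u_ii and l_{i,i-1}.

    Assumed layout (1-based, index 0 unused):
    diag[i] = a_{ii}, sub[i] = a_{i,i-1}, sup[i] = a_{i,i+1}.
    """
    n = len(diag) - 1
    q = [1.0, diag[1]]                      # q_0 = 1, q_1 = a_11
    for i in range(2, n + 1):
        # q_i = a_ii q_{i-1} - a_{i,i-1} a_{i-1,i} q_{i-2}
        q.append(diag[i] * q[i - 1] - sub[i] * sup[i - 1] * q[i - 2])
    u = [None] + [q[i] / q[i - 1] for i in range(1, n + 1)]
    l = [None, None] + [sub[i] / u[i - 1] for i in range(2, n + 1)]
    return q, l, u


# Illustrative 3x3 matrix A = [[2, 1, 0], [1, 3, 1], [0, 1, 4]]
diag = [None, 2.0, 3.0, 4.0]
sub = [None, None, 1.0, 1.0]
sup = [None, 1.0, 1.0, None]
q, l, u = lu_from_minors(diag, sub, sup)
print(q)   # leading principal minors q_0..q_3
print(l[2:], u[1:])
```

The sketch divides by [math]q_{i-1}[/math], so it assumes that all leading principal minors are nonzero.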

When calculating the values [math]q_i[/math], one uses the associativity of matrix multiplication. The recursion relation [math]q_i = a_{ii} q_{i-1} - a_{ii-1}a_{i-1i} q_{i-2}[/math] implies the matrix equality

- [math] \begin{bmatrix} q_i \\ q_{i-1} \\ \end{bmatrix} = \begin{bmatrix} a_{ii} & -a_{ii-1}a_{i-1i} \\ 1 & 0 \\ \end{bmatrix} \begin{bmatrix} q_{i-1} \\ q_{i-2} \\ \end{bmatrix} = T_i \begin{bmatrix} q_{i-1} \\ q_{i-2} \\ \end{bmatrix} = T_i T_{i-1}...T_2 \begin{bmatrix} q_{1} \\ q_{0} \\ \end{bmatrix} = T_i T_{i-1}...T_2 \begin{bmatrix} a_{11} \\ 1 \\ \end{bmatrix} [/math]

Matrix products [math]T_i T_{i-1}...T_2[/math] are calculated using the parallel doubling scheme. Note that the second row of each matrix [math]T_i[/math] is identical to the first row of the identity matrix. It follows that the second row of the product [math]T_i T_{i-1}...T_k[/math] is identical to the first row of the product [math]T_{i-1}...T_k[/math], which makes it possible to halve the number of arithmetic operations compared to the analogous product of general matrices of the same order.
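The row property can be checked on a small example. The sketch below is only an illustration: the helper names `matmul2` and `t_matrix` and the integer test data are hypothetical, chosen so that the comparison is exact.

```python
def matmul2(x, y):
    """Product of two 2x2 matrices given as nested lists."""
    return [
        [x[0][0] * y[0][0] + x[0][1] * y[1][0], x[0][0] * y[0][1] + x[0][1] * y[1][1]],
        [x[1][0] * y[0][0] + x[1][1] * y[1][0], x[1][0] * y[0][1] + x[1][1] * y[1][1]],
    ]


def t_matrix(a_ii, a_lower, a_upper):
    """T_i = [[a_ii, -a_{i,i-1} a_{i-1,i}], [1, 0]]."""
    return [[a_ii, -a_lower * a_upper], [1, 0]]


# Hypothetical 4x4 tridiagonal data with a_11 = 2 (integers keep the check exact).
a11 = 2
T2 = t_matrix(3, 1, 1)
T3 = t_matrix(4, 2, 1)
T4 = t_matrix(5, 1, 3)

short = matmul2(T3, T2)      # T_3 T_2
full = matmul2(T4, short)    # T_4 T_3 T_2

# Second row of T_4 T_3 T_2 equals the first row of T_3 T_2,
# so only the first row of each product has to be computed.
assert full[1] == short[0]

# q_4 follows from the first row of the full product applied to (a_11, 1).
q4 = full[0][0] * a11 + full[0][1]
print(full, q4)
```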

1.2 Mathematical description of the algorithm

First, for all [math]i[/math] from 2 to [math]n[/math], we set

[math]p_i = a_{ii}, r_i = -a_{ii-1}a_{i-1i}[/math],

and

[math]p_1 = 1, r_1 = 0[/math].

Thus [math](p_i, r_i)[/math] is the first row of [math]T_i[/math], and [math](p_1, r_1)[/math] is the first row of the identity matrix, which is consistent with [math]q_1 = a_{11}[/math] in the final formula below.

At step [math]k[/math], the calculations are conducted as follows:

Beginning from [math]i[/math] = 2, skip [math]2^{k-1}[/math] numbers; for the next [math]2^{k-1}[/math] numbers, set [math]j[/math] equal to the nearest integer smaller than [math]i[/math] of the form [math]j = 1 + 2^{k-1} m [/math].

At the first step, where [math]j = i-1[/math] and the second row of [math]T_{i-1}[/math] is [math](1, 0)[/math], the values [math]p_i, r_i[/math] are updated by the formulas [math]p^{new}_i = p_i p_{i-1} + r_i, r^{new}_i = p_i r_{i-1}[/math]; at the other steps, they are updated using the formulas [math]p^{new}_i = p_i p_{j}+r_i p_{j-1}, r^{new}_i = p_i r_{j} + r_i r_{j-1}[/math].

Then, again, [math]2^{k-1}[/math] numbers are skipped, and, for the next [math]2^{k-1}[/math] numbers, calculations similar to those above are performed, and so on until the range of [math]i[/math] from 2 to [math]n[/math] is exhausted.

The above steps are repeated until all the values of [math]i[/math] from 2 to [math]n[/math] would be skipped, which happens after [math]\lceil \log_2 (n-1) \rceil[/math] steps. After that, one calculates [math]q_i = p_i a_{11} + r_i[/math] for all [math]i[/math] from 1 to [math]n[/math], then [math]u_{ii} = q_i/q_{i-1}[/math] and [math]l_{ii-1} = a_{ii-1}/u_{i-1i-1}[/math] for all [math]i[/math] from 2 to [math]n[/math].
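The procedure can be sketched in Python as follows. This is a minimal serial simulation of the doubling steps under stated assumptions, not a reference implementation: the names `stone_lu` and `compact_lu`, the 1-based list layout, and the use of copies of `p` and `r` at every step are choices made here. The start values [math]p_1 = 1, r_1 = 0[/math] and the first-step update [math]p^{new}_i = p_i p_{i-1} + r_i, r^{new}_i = p_i r_{i-1}[/math] used in the code are derived from the definition of [math]T_i[/math] in Section 1.1, whose second row is [math](1, 0)[/math].

```python
def stone_lu(diag, sub, sup):
    """Doubling computation of l_{i,i-1} and u_ii.

    Assumed layout (1-based, index 0 unused):
    diag[i] = a_{ii}, sub[i] = a_{i,i-1}, sup[i] = a_{i,i+1}.
    """
    n = len(diag) - 1
    p = [0.0] * (n + 1)
    r = [0.0] * (n + 1)
    p[1], r[1] = 1.0, 0.0                 # first row of the identity matrix
    for i in range(2, n + 1):
        p[i] = diag[i]                    # first row of T_i
        r[i] = -sub[i] * sup[i - 1]

    half = 1                              # half = 2^(k-1) at step k
    while half < n - 1:
        new_p, new_r = p[:], r[:]         # every update reads the old values
        for start in range(2 + half, n + 1, 2 * half):
            j = start - 1                 # nearest smaller index 1 + 2^(k-1) m
            for i in range(start, min(start + half, n + 1)):
                if half == 1:             # second row of T_{i-1} is (1, 0)
                    new_p[i] = p[i] * p[j] + r[i]
                    new_r[i] = p[i] * r[j]
                else:                     # second row is (p_{j-1}, r_{j-1})
                    new_p[i] = p[i] * p[j] + r[i] * p[j - 1]
                    new_r[i] = p[i] * r[j] + r[i] * r[j - 1]
        p, r = new_p, new_r
        half *= 2

    q = [1.0] + [p[i] * diag[1] + r[i] for i in range(1, n + 1)]
    u = [0.0] * (n + 1)
    l = [0.0] * (n + 1)
    u[1] = q[1]                           # u_11 = a_11
    for i in range(2, n + 1):
        u[i] = q[i] / q[i - 1]
        l[i] = sub[i] / u[i - 1]
    return l, u


def compact_lu(diag, sub, sup):
    """Compact scheme of Gaussian elimination, used here only as a reference."""
    n = len(diag) - 1
    u = [0.0] * (n + 1)
    l = [0.0] * (n + 1)
    u[1] = diag[1]
    for i in range(2, n + 1):
        l[i] = sub[i] / u[i - 1]
        u[i] = diag[i] - l[i] * sup[i - 1]
    return l, u


# Illustrative 9x9 matrix with a_ii = 4 and off-diagonal entries equal to 1.
n = 9
diag = [None] + [4.0] * n
sub = [None, None] + [1.0] * (n - 1)
sup = [None] + [1.0] * (n - 1) + [None]
l_stone, u_stone = stone_lu(diag, sub, sup)
l_ref, u_ref = compact_lu(diag, sub, sup)
max_diff = max(abs(a - b) for a, b in zip(l_stone[2:] + u_stone[1:], l_ref[2:] + u_ref[1:]))
print(max_diff)
```

For this small, well-conditioned example the two decompositions agree up to round-off, in line with the statement that both schemes produce the same result in exact arithmetic. In a parallel setting, all updates inside one step are independent of each other, which is why the sketch reads only the values from the previous step.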

1.3 Computational kernel of the algorithm

The computational kernel of the algorithm is mainly composed of operations of the type [math]ab+cd[/math] and [math]ab+c[/math], plus a small number of separate multiplications and divisions. There are no isolated long sequences of calculations.

1.4 Macro structure of the algorithm

At the macro-level, the following macro-operations can be set out:

1. Calculation of the entries of the matrices [math]T_i[/math].

2. Calculation of the products of the matrices [math]T_{i}[/math] using the doubling scheme.

3. Calculation of the ultimate results.

1.5 Implementation scheme of the serial algorithm

From the outset, the Stone method was designed for parallel implementation: compared to, say, the classical Thomas algorithm, it involves redundant calculations, so a serial implementation offers no advantage. A serial implementation of the method also makes no sense because the method is numerically unstable.

1.6 Serial complexity of the algorithm

For a full execution of the Stone algorithm resulting in the decomposition of the given tri-diagonal matrix into the product of two bi-diagonal matrices, one should perform

- [math]2n-2[/math] divisions,

- [math]2(n-1)\lceil \log_2 (n-1) \rceil + o((n-1)\lceil \log_2 (n-1) \rceil)[/math] multiplications, and

- [math](n-1)\lceil \log_2 (n-1) \rceil +o((n-1)\lceil \log_2 (n-1) \rceil)[/math] additions.

Consequently, in terms of serial complexity, the algorithm should be qualified as a linear-logarithmic complexity algorithm.
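The leading terms can be checked by counting the operations of the doubling phase alone. The sketch below assumes that the first step costs two multiplications and one addition per updated index and that every later step costs four multiplications and two additions, as in Figure 1; the function name `doubling_op_counts` is hypothetical, and the initial products [math]a_{ii-1}a_{i-1i}[/math], the final values [math]q_i[/math], and the divisions are deliberately left out.

```python
def doubling_op_counts(n):
    """Count multiplications and additions in the doubling steps only."""
    mults = adds = 0
    half = 1                                  # half = 2^(k-1) at step k
    while half < n - 1:
        # Number of indices i in 2..n that are updated at this step.
        processed = sum(
            min(start + half, n + 1) - start
            for start in range(2 + half, n + 1, 2 * half)
        )
        if half == 1:
            mults += 2 * processed            # one product and one a + b*c
            adds += processed
        else:
            mults += 4 * processed            # two operations of type a*b + c*d
            adds += 2 * processed
        half *= 2
    return mults, adds


print(doubling_op_counts(9))
```

For [math]n = 9[/math] this gives 40 multiplications and 20 additions, which stays below the leading terms [math]2(n-1)\lceil \log_2 (n-1) \rceil = 48[/math] and [math](n-1)\lceil \log_2 (n-1) \rceil = 24[/math] because the first step is cheaper than the others.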

1.7 Information graph

As already noted, the macro-structure of the algorithm consists of three parts.

In the first part, off-diagonal entries of the matrices [math]T_{i}[/math] are calculated.

In the second part, products of the matrices [math]T_{i}[/math] are calculated using the doubling technique. This calculation is shown in Figure 1; it uses the results of the first part.

In the concluding third part, the final results are calculated with the use of the results of the second part.

The inner graphs of the first and third parts are empty; their operations are only related to the second part.

1.8 Parallelization resource of the algorithm

The critical path of the Stone algorithm for the LU decomposition of tri-diagonal matrices consists of

- [math]1[/math] layer of divisions,

- [math]\lceil \log_2 (n-1) \rceil+1[/math] layers of multiplications, and

- [math]\lceil \log_2 (n-1) \rceil+1[/math] layers of additions.

Consequently, in terms of the parallel form height, the algorithm should be qualified as a logarithmic complexity algorithm. The width of a layer is [math]n[/math]; therefore, in terms of the parallel form width, the algorithm should be qualified as a linear complexity algorithm.
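To make the layer structure concrete, the following sketch lists, for each doubling step, the index pairs [math](i, j)[/math] that are updated. It is an illustration only: the name `doubling_schedule` is hypothetical, and [math]j[/math] is taken as the nearest smaller index of the form [math]1 + 2^{k-1}m[/math], which is the index of the last skipped entry before the updated group.

```python
from math import ceil, log2


def doubling_schedule(n):
    """Return, for each step k = 1, 2, ..., the (i, j) pairs updated at that step."""
    steps = []
    half = 1                                   # half = 2^(k-1)
    while half < n - 1:
        pairs = []
        for start in range(2 + half, n + 1, 2 * half):
            j = start - 1
            for i in range(start, min(start + half, n + 1)):
                pairs.append((i, j))
        steps.append(pairs)
        half *= 2
    return steps


schedule = doubling_schedule(9)
for k, pairs in enumerate(schedule, start=1):
    print(k, pairs)

# Height formula from the summary table, evaluated for n = 9.
height = 2 * ceil(log2(9 - 1)) + 3
print(height)
```

All pairs listed for one step read only values produced at earlier steps, so they can be processed simultaneously; the number of steps equals [math]\lceil \log_2 (n-1) \rceil[/math].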

1.9 Input and output data of the algorithm

Input data: tri-diagonal matrix [math]A[/math] (with entries [math]a_{ij}[/math]).

Size of the input data: [math]3n-2[/math]. In different implementations, information can be stored differently for economy reasons. For instance, each diagonal of the matrix can be stored as a row of an array.
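One possible storage layout is sketched below. The function name `pack_tridiagonal`, the order of the rows, and the zero padding of the two shorter diagonals are assumptions made for this example; other conventions are equally valid.

```python
def pack_tridiagonal(dense):
    """Store the three diagonals of a dense tridiagonal matrix as three rows.

    Row 0: superdiagonal a_{i,i+1} (padded with 0.0 at the end),
    row 1: main diagonal a_{ii},
    row 2: subdiagonal a_{i,i-1} (padded with 0.0 at the start).
    """
    n = len(dense)
    sup = [dense[i][i + 1] for i in range(n - 1)] + [0.0]
    diag = [dense[i][i] for i in range(n)]
    sub = [0.0] + [dense[i][i - 1] for i in range(1, n)]
    return [sup, diag, sub]


dense = [
    [2.0, 1.0, 0.0],
    [1.0, 3.0, 1.0],
    [0.0, 1.0, 4.0],
]
packed = pack_tridiagonal(dense)
n = len(dense)
meaningful = 3 * n - 2        # entries that carry data; the two pads do not
print(packed, meaningful)
```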

Output data: lower bi-diagonal matrix [math]L[/math] (with entries [math]l_{ij}[/math], where [math]l_{ii}=1[/math]) and upper bi-diagonal matrix [math]U[/math] (with entries [math]u_{ij}[/math]).

Size of the output data is formally [math]3n-2[/math]. However, since [math]n[/math] items in the input and output data are identical, actually only [math]2n-2[/math] items are calculated.

1.10 Properties of the algorithm

The ratio of the serial complexity, [math]O(n \log n)[/math], to the parallel complexity, [math]O(\log n)[/math], is [math]O(n)[/math].

The computational power of the algorithm, understood as the ratio of the number of operations to the total size of the input and output data, is logarithmic.

Within the chosen version, the algorithm is completely deterministic.

The algorithm is numerically stable only if the leading principal minors of the matrix do not grow. If they can grow significantly, then the stability region of the algorithm is very narrow.
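The growth of the leading principal minors can be observed on a simple example. The sketch below is an illustration under stated assumptions, not a stability analysis: the test matrix ([math]a_{ii} = 4[/math], off-diagonal entries equal to 1) and the size [math]n = 600[/math] are arbitrary choices, and the sketch evaluates only the recurrence for [math]q_i[/math], not the doubling steps. It shows the most visible consequence of growth, namely that the values [math]q_i[/math] leave the range of double precision, while the pivots [math]u_{ii}[/math] of the compact scheme stay bounded.

```python
import math

n = 600

# Leading principal minors: q_i = 4 q_{i-1} - q_{i-2}, q_0 = 1, q_1 = 4.
q = [1.0, 4.0]
for i in range(2, n + 1):
    q.append(4.0 * q[i - 1] - q[i - 2])

# Pivots of the compact scheme for the same matrix: u_i = 4 - 1 / u_{i-1}.
u = [None, 4.0]
for i in range(2, n + 1):
    u.append(4.0 - 1.0 / u[i - 1])

# First index at which q_i is no longer a finite double (inf or nan).
first_bad = next(i for i, value in enumerate(q) if not math.isfinite(value))

print(first_bad, q[n], u[n])
```

Once [math]q_i[/math] overflows, the quotients [math]q_i/q_{i-1}[/math] can no longer be formed, even though the exact pivots are of moderate size.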

2 Software implementation of the algorithm

2.1 Implementation peculiarities of the serial algorithm

Because of its highly redundant calculations, the Stone doubling algorithm was never intended for serial implementation. Once its numerical instability was discovered, it became clear that it would not be implemented on serial architectures in the future either.

2.2 Possible methods and considerations for parallel implementation of the algorithm

Because of its numerical instability, no implementation of the algorithm is of practical value.

The numerical instability of the algorithm makes any analysis of locality pointless. Nevertheless, the algorithm graph contains a number of arcs that are long both in time (meaning the difference between the indices of the layers to which the endpoints of an arc belong) and in space (unless the nodes are allocated in a hypercube, which is physically impossible). This inherent non-locality must hamper the execution of the algorithm. An actual analysis of memory accesses for a serial code makes no sense because such code is not used now and is not expected to be used in the future.

The numerical instability of the algorithm also makes any measurement of the efficiency and dynamic characteristics of its implementations pointless. Moreover, the presence of redundant calculations significantly limits the actual efficiency even for small problems, which are not yet affected by instability.

Due to its numerical instability, the algorithm is not used in practice. According to the original publication [3], it was designed to replace the more popular cyclic reduction. In that respect, however, the algorithm has failed.

2.3 Run results

2.4 Conclusions for different classes of computer architecture

Even for small problems, where round-off errors remain acceptable, an efficient implementation of the method is impossible. It would be faster to solve the SLAE on an ordinary PC using the Thomas algorithm.

3 References

1. Voevodin V.V. Computational foundations of linear algebra. Moscow: Nauka, 1977.

2. Voevodin V.V., Kuznetsov Yu.A. Matrices and computations. Moscow: Nauka, 1984.

3. Stone H.S. An Efficient Parallel Algorithm for the Solution of a Tridiagonal Linear System of Equations // J. ACM, Vol. 20, No. 1 (Jan. 1973), P. 27-38.