Householder (reflections) method for reducing a symmetric matrix to tridiagonal form, locality

1 Links

The main fragment of the implementation, on the basis of which quantitative estimates were obtained, is given here.

2 Locality of data and computations

According to the graph shown in the figure, the algorithm unfortunately involves a large amount of data transfer. Some of the arcs in the graph inevitably remain long, which degrades spatial locality.

2.1 Locality of implementation

2.1.1 Structure of memory access and a qualitative estimation of locality

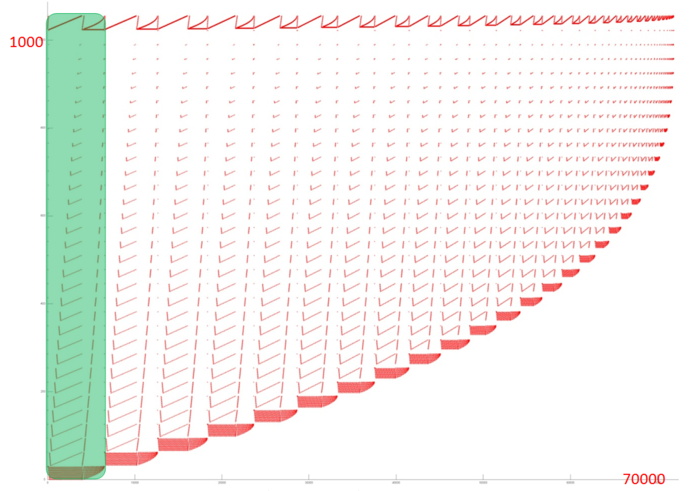

Fig. 1 shows the memory access profile for an implementation of the Householder (reflection) method, which reduces a symmetric matrix to tridiagonal form. The profile clearly has an iterative structure, and its iterations are very similar to one another. The main difference between iterations is the data set used: at each iteration, the first several elements drop out of the working set, so the higher the iteration index, the less data is used. The number of memory accesses also decreases slightly from one iteration to the next. To understand the overall profile, it is therefore sufficient to analyze a single iteration. Let us consider the very first iteration in more detail.
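The shrinking working set can be illustrated with a minimal sketch of Householder tridiagonalization. This is not the implementation that was profiled; it is a plain-Python illustration written under the assumption of a dense symmetric matrix stored as a list of rows, and the function name `householder_tridiagonalize` is hypothetical. At step `k`, only the trailing block starting at row and column `k + 1` is read and updated, so each iteration touches fewer elements than the previous one.

```python
import math

def householder_tridiagonalize(a):
    """Return a tridiagonal matrix orthogonally similar to the symmetric matrix a.

    Illustrative sketch only: dense storage, no blocking, no use of symmetry
    to halve the work.
    """
    n = len(a)
    a = [row[:] for row in a]  # work on a copy
    for k in range(n - 2):
        # Part of column k below the diagonal: the vector to be reflected.
        x = [a[i][k] for i in range(k + 1, n)]
        norm_x = math.sqrt(sum(t * t for t in x))
        if norm_x == 0.0:
            continue  # column is already in the required form
        # Sign chosen opposite to x[0] to avoid cancellation.
        alpha = -math.copysign(norm_x, x[0])
        v = x[:]
        v[0] -= alpha
        norm_v = math.sqrt(sum(t * t for t in v))
        v = [t / norm_v for t in v]
        m = n - k - 1  # size of the trailing block; shrinks with k
        # p = B v, where B is the trailing m-by-m block.
        p = [sum(a[k + 1 + i][k + 1 + j] * v[j] for j in range(m))
             for i in range(m)]
        kappa = sum(v[i] * p[i] for i in range(m))
        q = [2.0 * (p[i] - kappa * v[i]) for i in range(m)]
        # Rank-2 update: B <- B - v q^T - q v^T.
        for i in range(m):
            for j in range(m):
                a[k + 1 + i][k + 1 + j] -= v[i] * q[j] + q[i] * v[j]
        # Column k and row k now have a single off-diagonal entry.
        a[k + 1][k] = a[k][k + 1] = alpha
        for i in range(k + 2, n):
            a[i][k] = a[k][i] = 0.0
    return a
```

The inner loops run over `m = n - k - 1` elements per dimension, which matches the observation that later iterations use less data and perform somewhat fewer accesses.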

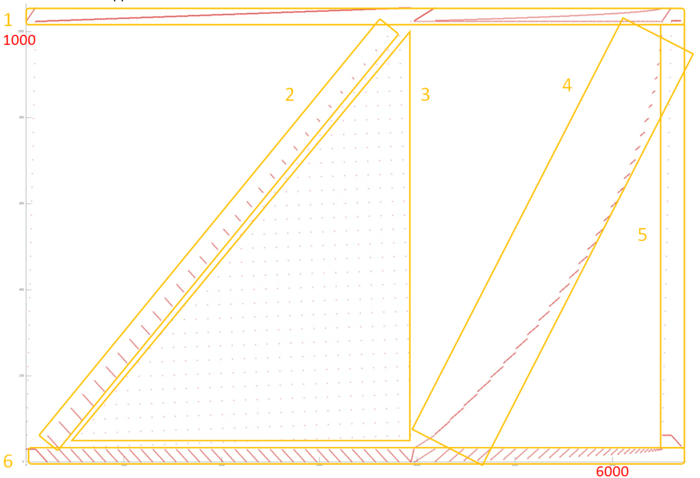

Fig. 2 shows the memory access profile for the first iteration (highlighted in green in Fig. 1). It can be partitioned into several fragments, each of which is treated separately. The structure of fragments 2-5 can be understood from the overall plot of the iteration. Fragment 2 is a collection of sequential traversals performed with a small stride in reverse order; the number of elements in a traversal gradually decreases. Such a structure has high spatial locality because closely spaced data are accessed one after another. Its temporal locality, on the other hand, is low since the data are not reused. The same is true of fragment 4, which differs from fragment 2 mainly in that the traversals run in forward order. Note that the distortion of fragment 4 relative to fragment 2 is caused not by the structure of the fragment itself but by the different number of accesses performed in parallel (see, for instance, the right part of fragment 6).

Fragments 3 and 5 consist of a small number of accesses to data located far from each other. Such fragments have low spatial and temporal locality.
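The qualitative distinctions drawn above can be made concrete with a small sketch on synthetic address traces. The two metrics used here, mean absolute stride as a rough proxy for spatial locality and the fraction of repeated addresses as a rough proxy for temporal locality, are simplifications assumed for illustration; they are not the estimates used in this article, and all function names are hypothetical. The traces only mimic the described shapes and are not taken from the actual profile.

```python
def mean_abs_stride(trace):
    """Average absolute distance between consecutive addresses (lower is better)."""
    steps = [abs(b - a) for a, b in zip(trace, trace[1:])]
    return sum(steps) / len(steps)

def reuse_fraction(trace):
    """Share of accesses that hit an address already seen earlier in the trace."""
    seen = set()
    reused = 0
    for addr in trace:
        if addr in seen:
            reused += 1
        seen.add(addr)
    return reused / len(trace)

def shrinking_reverse_traversals(n):
    """Reverse stride-1 traversals of lengths n, n-1, ..., 1 over disjoint ranges."""
    trace, base = [], 0
    for length in range(n, 0, -1):
        trace.extend(range(base + length - 1, base - 1, -1))
        base += length
    return trace

def repeated_small_scan(size, repeats):
    """The same small block scanned with stride 1 several times."""
    return list(range(size)) * repeats

def far_apart(count, gap):
    """A few accesses to addresses separated by a large gap."""
    return [i * gap for i in range(count)]

patterns = {
    "shrinking reverse traversals": shrinking_reverse_traversals(50),
    "small block, repeated": repeated_small_scan(40, 3),
    "few distant accesses": far_apart(5, 10_000),
}
for name, trace in patterns.items():
    print(name, mean_abs_stride(trace), reuse_fraction(trace))
```

Under these assumptions, the first pattern shows a small stride with no reuse, the second a small stride with substantial reuse, and the third a large stride with no reuse.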

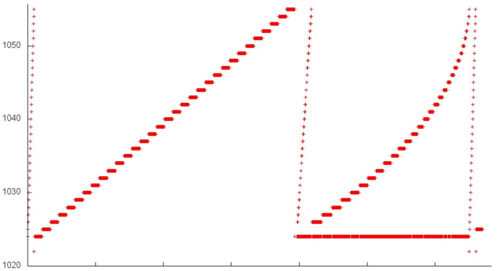

The remaining fragments require a more detailed examination. Let us inspect fragment 1, shown in full in Fig. 3. It consists of several stages, each of which is a sequential traversal of elements with stride 1. In some cases the data are reused many times (this is especially noticeable in the lower right part of the figure, where the same element is accessed repeatedly). Since fragment 1 involves only about 40 elements, this set of accesses has high spatial and temporal locality.

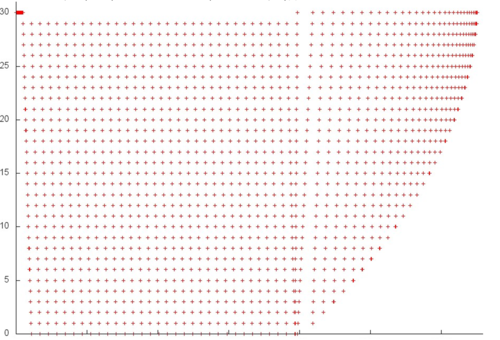

Now consider fragment 6 in detail (see Fig. 4). Here, repeated accesses to the same data occur at certain intervals rather than in immediate succession. However, the number of elements involved is again very small, so the locality (both spatial and temporal) is high in this case as well.

On the whole, this iteration has fairly high spatial and temporal locality. Since the other iterations have a similar structure, this conclusion extends to the overall memory access profile.

2.1.2 Quantitative estimation of locality

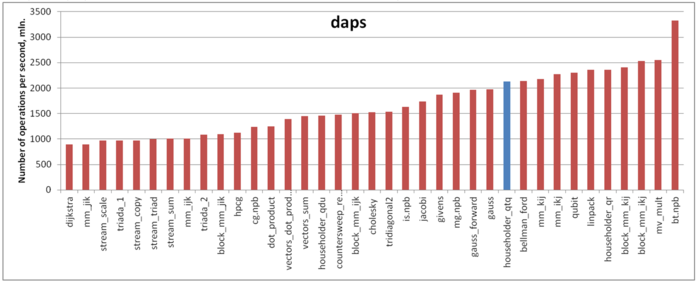

The estimate is based on daps, which measures the number of memory accesses (reads and writes) per second. Daps is the memory-access analog of flops: it evaluates memory access performance rather than locality as such. It is nevertheless a useful source of information, particularly for comparison with the results of the cvg estimate.
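As a rough illustration of the definition, daps is simply the access count divided by the elapsed time. The sketch below is an assumption-laden toy, not the measurement procedure used for Fig. 5: the function names are hypothetical, only one read per element is counted, and in Python the interpreter overhead dominates, so the resulting number says little about the memory subsystem itself.

```python
import time

def daps(num_accesses, elapsed_seconds):
    """Memory accesses (reads and writes) per second."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed time must be positive")
    return num_accesses / elapsed_seconds

def timed_sum(data):
    """Sum a list, returning (total, counted reads, elapsed seconds)."""
    start = time.perf_counter()
    total = 0.0
    for x in data:  # counted as one read per element
        total += x
    elapsed = time.perf_counter() - start
    return total, len(data), elapsed

total, reads, elapsed = timed_sum([1.0] * 1_000_000)
print(f"{daps(reads, elapsed):.3e} accesses per second")
```

A real measurement would count the accesses issued by compiled code and would typically be repeated several times to reduce timing noise.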

Fig. 5 shows daps values for implementations of popular algorithms, sorted in ascending order (in general, the higher the daps, the better the performance). The memory access performance of this implementation is fairly high: its daps value is only slightly lower than that of the Linpack benchmark, which in turn performs slightly better than implementations of the Jacobi method or Gaussian elimination.