Bellman-Ford, scalability

Primary author of this description: .

1 Links

The experiments were conducted with the Bellman-Ford algorithm implemented for CPU.

2 Locality of data and computations

2.1 Locality of implementation

2.1.1 Structure of memory access and a qualitative estimation of locality

2.1.2 Quantitative estimation of locality

3 Scalability of the algorithm and its implementations

3.1 Scalability of the algorithm

The Bellman-Ford algorithm has considerable scalability potential: each arc is processed independently of the others, so each computational process can be assigned its own portion of the graph arcs. The bottleneck is access to the distance array, which is shared by all processes. The algorithm tolerates relaxed synchronization of this array between processes (a process need not immediately see a new distance value written by another process), possibly at the cost of a greater number of global iterations.
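The trade-off between relaxed synchronization and the number of global iterations can be illustrated with a minimal sequential sketch. It is not the implementation used in the experiments: the function name `bellman_ford` and the flag `stale_reads` are hypothetical, and delayed visibility of writes is only simulated by reading distances from a snapshot taken at the start of each global iteration. The graph is assumed to have no negative cycles.

```python
import math

def bellman_ford(num_vertices, arcs, source, stale_reads):
    """Return (distances, global_iterations) for arcs given as (u, v, weight).

    stale_reads=True simulates relaxed synchronization: during a global
    iteration every arc reads distances from a snapshot, so updates made
    in the same iteration are not yet visible.
    Assumes the graph has no negative cycles.
    """
    dist = [math.inf] * num_vertices
    dist[source] = 0
    iterations = 0
    # n - 1 relaxing iterations suffice; one more detects that nothing changed.
    for _ in range(num_vertices):
        visible = list(dist) if stale_reads else dist
        changed = False
        for u, v, w in arcs:  # every arc is relaxed independently of the others
            candidate = visible[u] + w
            if candidate < dist[v]:
                dist[v] = candidate
                changed = True
        iterations += 1
        if not changed:
            break
    return dist, iterations

# A path 0 -> 1 -> 2 -> 3 with unit weights.
path = [(0, 1, 1), (1, 2, 1), (2, 3, 1)]
print(bellman_ford(4, path, 0, stale_reads=False))  # ([0, 1, 2, 3], 2)
print(bellman_ford(4, path, 0, stale_reads=True))   # ([0, 1, 2, 3], 4)
```

Both variants produce the same distances, but the variant with stale reads needs more global iterations on this example, because each new distance propagates by only one arc per iteration.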

3.2 Scalability of the algorithm implementation

Let us study the scalability of the parallel implementation of the Bellman-Ford algorithm in accordance with the scalability methodology. The study was conducted on the Lomonosov-2 supercomputer of the Moscow University Supercomputing Center.

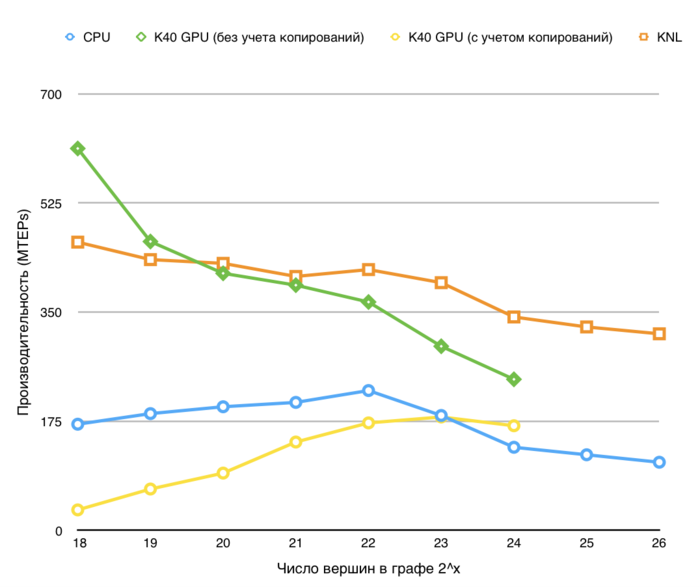

Launch parameters of the implementation and the ranges over which they were varied:

- number of processors [1 : 28] with a step of 1;

- graph size [2^20 : 2^27].

Performance is measured in TEPS (Traversed Edges Per Second), that is, the number of graph arcs processed by the algorithm per second. This metric makes it possible to compare performance across graphs of different sizes and to estimate how performance degrades as the graph size increases.
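As a minimal sketch of how this metric can be computed, the helper below divides the number of processed arcs by the elapsed wall-clock time. The names `teps` and `measure_teps` are hypothetical and are not part of the implementation studied here; how the number of processed arcs is counted (for example, per global iteration) is left to the caller.

```python
import time

def teps(arcs_processed, elapsed_seconds):
    """Traversed Edges Per Second: processed arcs divided by elapsed time."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return arcs_processed / elapsed_seconds

def measure_teps(run, arcs_processed):
    """Time a callable `run` and report TEPS for the given arc count."""
    start = time.perf_counter()
    run()
    elapsed = time.perf_counter() - start
    return teps(arcs_processed, elapsed)

# Example: 1000 arcs processed in half a second.
print(teps(1000, 0.5))  # 2000.0
```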

4 Dynamic characteristics and efficiency of the algorithm implementation

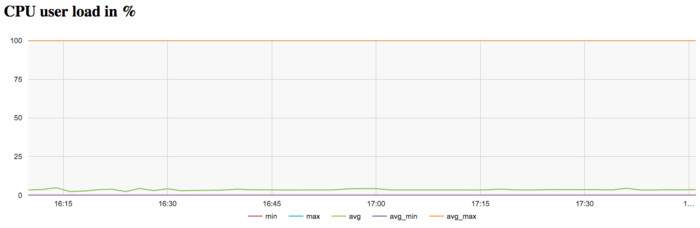

All results were obtained on the Lomonosov-2 supercomputer with Intel Xeon E5-2697v3 processors. The problem was solved for a large graph (of size 2^27) on a single node, and only one iteration was performed. The figures below illustrate the efficiency of this implementation.

The CPU load graph shows that the processor is idle almost all the time: the average load is about 5 percent. This is a poor result even for a program that uses only one core.

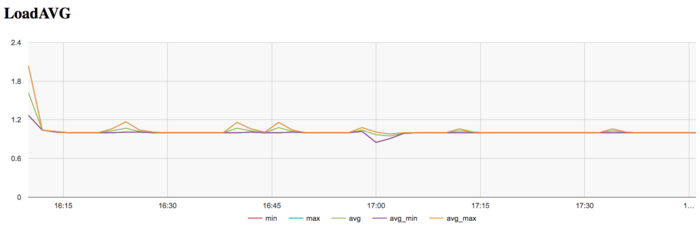

The graph of the number of processes waiting to enter the computation stage (Loadavg) shows that this value stays at a constant 1 throughout the program execution. This means that at most one process loads the hardware at any time, which points to poor use of the node's resources.

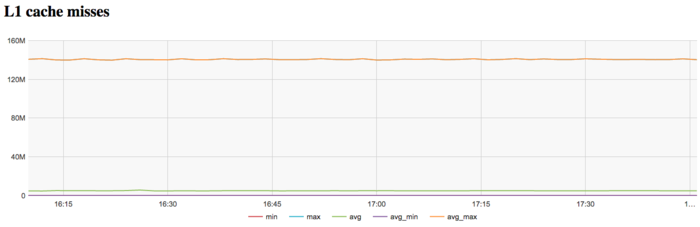

The graph of L1 cache misses shows a very large number of misses, about 140 million per second, which points to a potential cause of inefficiency.

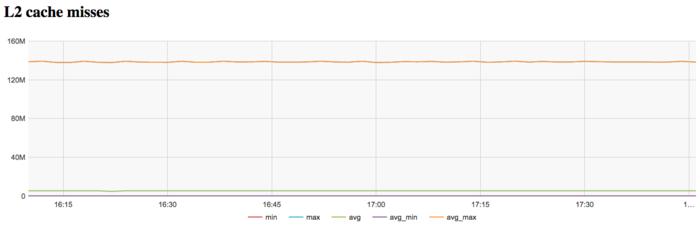

The graph of L2 cache misses shows that the number of such misses is also very large, about 140 million per second, which indicates extremely inefficient memory access.

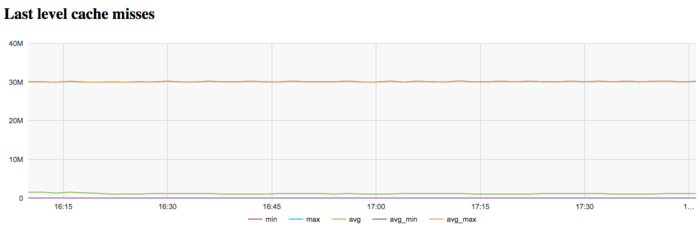

The graph of L3 cache misses shows that the number of these misses is again large, about 30 million per second. This indicates that the problem fits the cache very poorly and the program has to access RAM constantly, which is explained by the very large size of the input graph.
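The cache-capacity argument above can be checked with rough arithmetic. The sketch below is an estimate under stated assumptions, not a measurement: it assumes that the graph size 2^27 denotes the number of vertices, that each distance is stored in 4 bytes, and that the L3 cache holds 35 MiB. The names `distance_array_bytes` and `L3_BYTES_ASSUMED` are illustrative.

```python
# All constants are assumptions made for illustration,
# not values taken from the monitoring data.
BYTES_PER_DISTANCE = 4           # assumed: one 32-bit value per vertex
L3_BYTES_ASSUMED = 35 * 2**20    # assumed L3 capacity of 35 MiB

def distance_array_bytes(num_vertices, bytes_per_distance=BYTES_PER_DISTANCE):
    """Memory taken by the distance array alone (arcs are not counted)."""
    return num_vertices * bytes_per_distance

size = distance_array_bytes(2**27)
print(size // 2**20, "MiB for the distance array")
print(round(size / L3_BYTES_ASSUMED, 1), "times the assumed L3 capacity")
```

Under these assumptions the distance array alone is more than ten times larger than the last-level cache, before the arcs themselves are counted, which is consistent with the constant RAM traffic seen in the graph.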

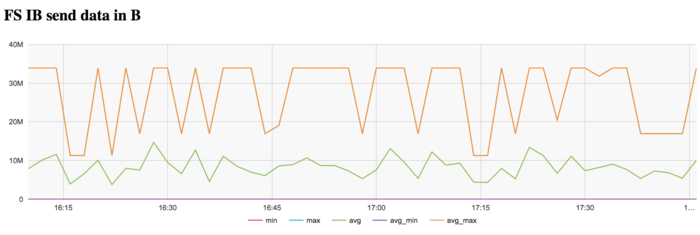

The graph of the data rate through the Infiniband network shows fairly intensive use of the network at the first stage. This is explained by the program logic, which reads the graph from a file on disk. On Lomonosov-2, communication with this disk goes through a dedicated Infiniband network.

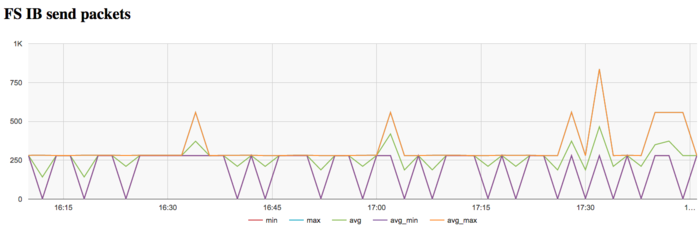

The graph of the data rate measured in packets per second shows a similar picture: very high intensity at the first stage of execution, after which the network is hardly used.

On the whole, the system monitoring data show that the program ran at a stable intensity. However, it used memory very inefficiently because of the extremely large size of the graph.