LU decomposition via Gaussian elimination, locality

Primary authors of this description: Vad.V.Voevodin (Section 3).

Contents

1 Links

The key implementation fragment which was used to obtain a quantitative assessment is presented here (Kernel1 function).

2 Locality of data and computations

2.1 Locality of implementation

2.1.1 Structure of memory access and a qualitative estimation of locality

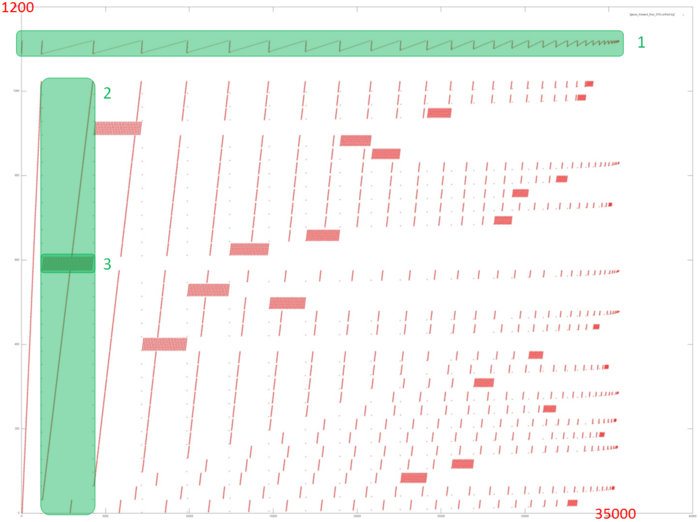

Fig.1 shows the memory call profile for the forward Gaussian decomposition of a system of linear equations. Like the source code, the figure shows that the program involves two primary arrays of data: calls to elements of the first array are marked in green (Fragment 1); all other calls are to elements of the second array. It is clear that the program has a complex profile. The main conclusion that can be made from this figure is the iterative nature of the profile, where each subsequent iteration contains fewer calls. This is particularly clear from Fragment 1.

Next, as you know, the Gaussian method discards one line from the linear system of equations with each iteration. This is also clear from Fig.1: each iteration (one of which is selected as Fragment 2) consists of a series of memory calls related to the line being discarded (Fragment 3), after which these elements are no longer addressed. It can also be seen that fragments similar to Fragment 3 are becoming smaller with each subsequent iteration, both in terms of the number of calls and number of elements addressed.

However, this general picture is very difficult for providing a qualitative assessment of data locality. Let’s move to a more detailed view of Fragment 1, which corresponds to the access to one of the two data arrays in memory.

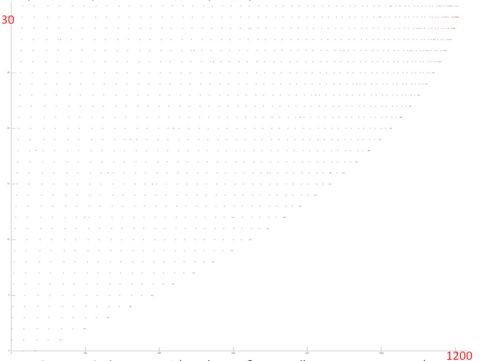

Fig.2 shows a detailed view of Fragment 1, highlighted in green in Fig.1. The picture looks curious: based on Fig.1, the impression was that this profile consisted of two different cycles of iterations, with the first cycle iterations being substantially shorter than second cycle iterations. In fact, Fig.2 shows that the profile consists of the same type of iterations, reading the array elements sequentially. With each subsequent iteration, the element with the smallest index is discarded. The illusion of two different cycles is created solely by the number of calls to the other array – when the number of such calls is high, the iteration for this fragment looks longer. Fragment 1 (as shown in Fig.2) has only about 1,200 memory calls, while the entire profile has 34,000 calls. That is, the first array accounts for just 3.5% of all memory calls in the program.

Since this profile is based on a sequential search through the array elements, we can say the fragment has high spatial locality. And since there are many iterations searching through array elements, it is clear that temporal locality is also high.

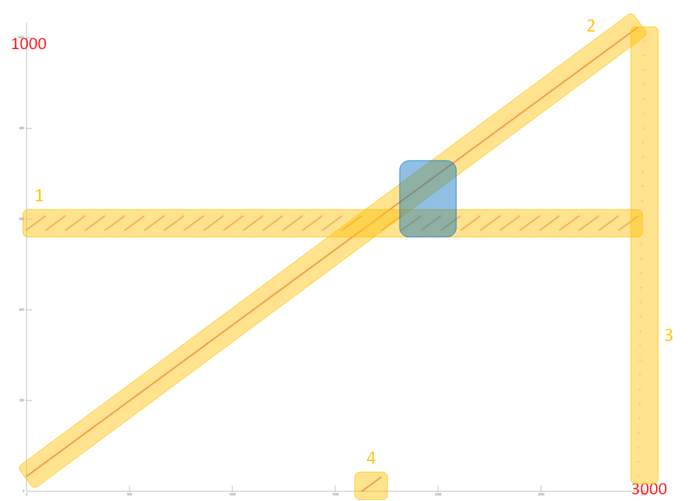

Now let’s review the memory call profile for the second array, which is substantially larger and more complex than the first profile. Fig.3 shows a detailed view of Fragment 2 as highlighted in Fig.1. This fragment consists of four parts, highlighted in orange.

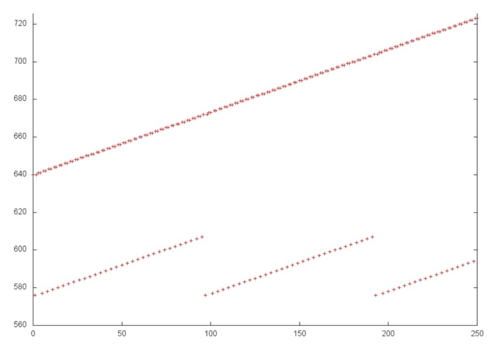

Part 1 looks like a cyclic sequential search through a small part of the array elements, and a closer look shows this is indeed the case. Fig.4 shows a close-up of Fragment 2 which is highlighted in blue in Fig.3. This close-up also shows that Part 2 is nearly identical to a sequential search of all elements of this array. Thus, both parts have very high spatial locality (due to the sequential search). Part 1 also has high temporal locality, as the search is repeated in a cycle, unlike part 2, where each element is only addressed once.

Part 3 consists of only a few memory calls, and represents a sequential search of all array elements with a very large interval – much larger than in Part 2. In this case, due to the high interval, spatial locality is low and there is no temporal locality either as the search is only done once.

Part 4 represents another sequential search through a small part of the array, the size of which equals the number of discarded array elements; the closer to the end of the profile, the more memory calls are there in part 4. This part has the same locality properties as Part 2. The only difference is in the size, but it does not affect the locality much.

When viewing Fragment 2 in general, the main impact comes from parts 1 and 2; therefore we can say this fragment has high temporal and spatial locality. However, with subsequent iterations (see Fig.1) locality will generally be decreasing as each iteration discards several elements.

2.1.2 Quantitative estimation of locality

Launch conditions are described here.

The first estimate is based on daps, which assesses the number of memory access operations (reads and writes) per second. Similar to flops, this tool is used to evaluate memory access performance, rather than locality. But it is still a good source of information, particularly in combination with the next estimate – cvg.

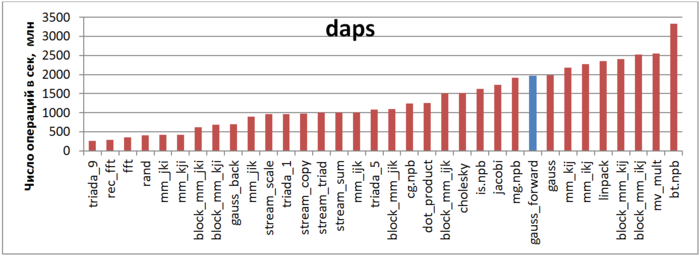

Fig.5 shows the daps values for various implementations of common algorithms sorted in ascending order (larger daps values generally mean higher performance). You can see that the blue column, which corresponds to the Forward Guassian method, is located in the right part of the chart indicating high performance, a little lower than the Linpak test performance. It should also be noted that the daps value for this task is almost equal to the daps value for the entire Gaussian method (“gauss” column), which combines the forward and reverse methods. This is due to the fact that reverse method has a much lower number of memory calls than forward method, so its impact is limited.

The second tool – cvg – is intended to obtain a more machine-independent locality assessment. It determines how often a program needs to pull data to cache memory. Respectively, the smaller the cvg value, the less frequently data needs to be called to cache, and the better the locality.

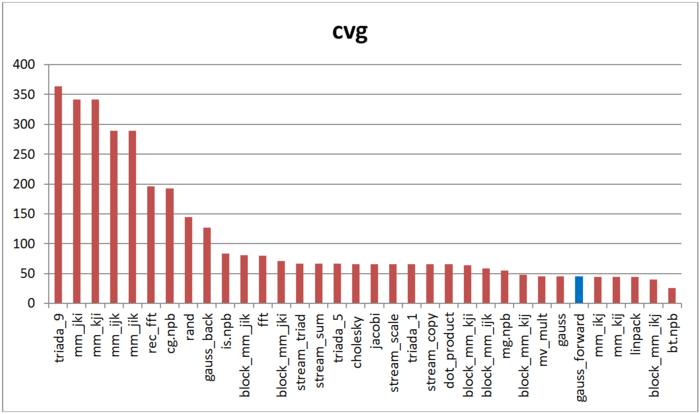

Fig.6 shows cvg values for the same set of implementations, sorted in descending order (lower cvg generally means higher locality). You can see that according to this assessment, just as with daps, the forward Gaussian method has high locality. The Linpak test offers comparable results as well. Interestingly, the ranking for the forward method and a combination of the forward and reverse methods together (“gauss” column) are almost the same.